Hi!

I’m Parth, and this is the public component of my second brain.

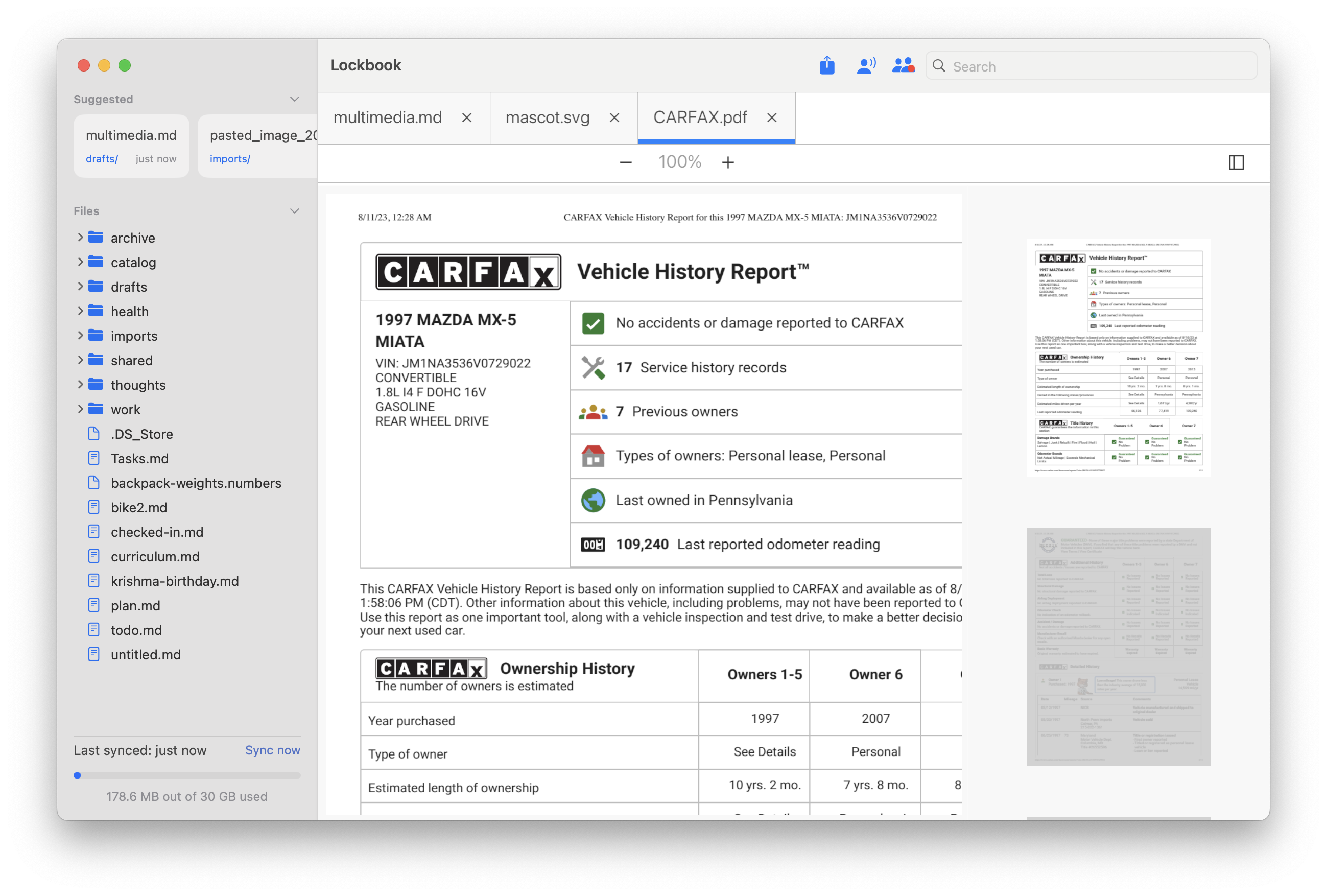

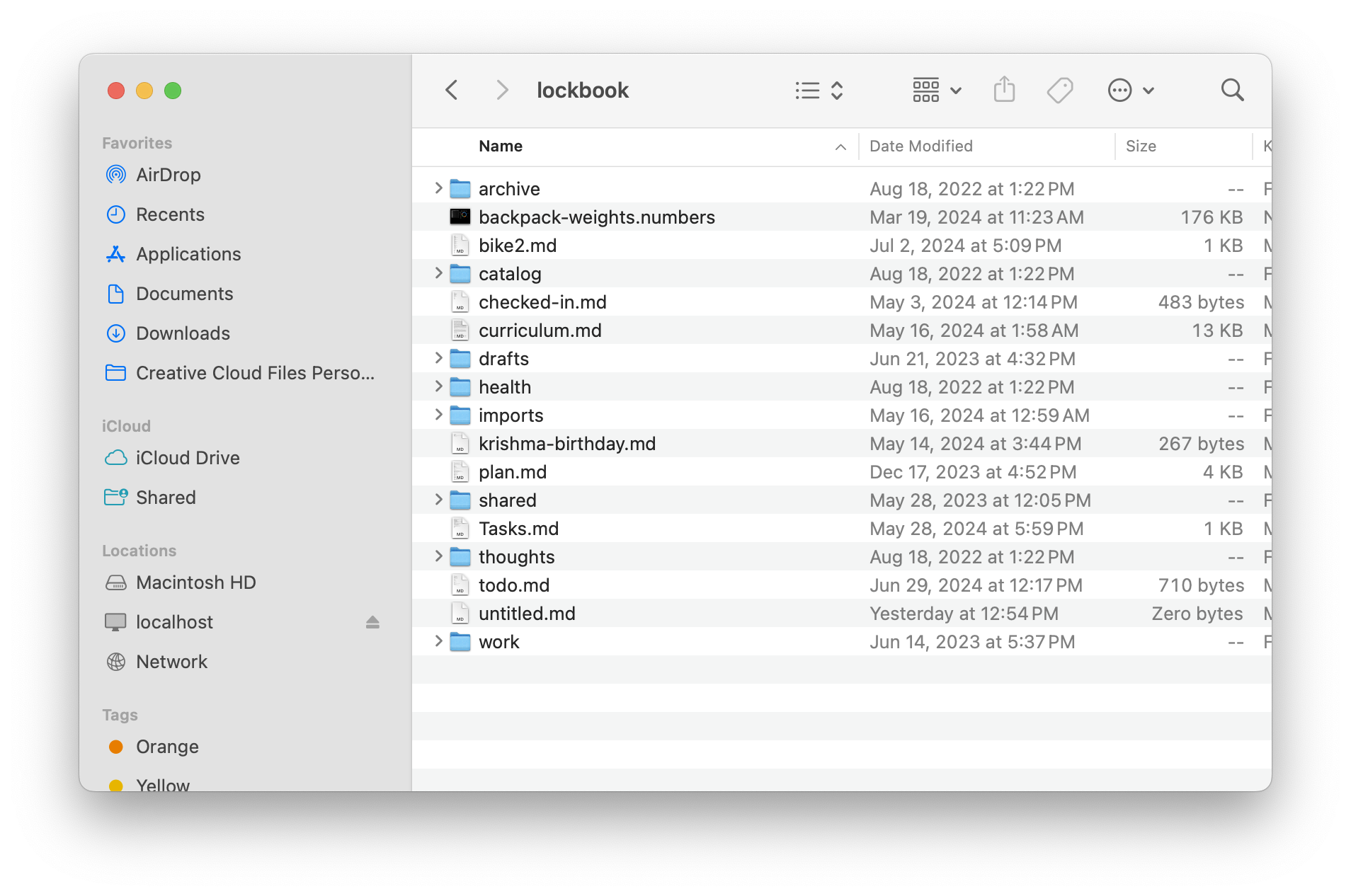

I’m a programmer with many interests, but I’m primarily working on my startup Lockbook.

In the General Programming section you can find some abstract ideas about building products, my latest positions on various programming topics, and other areas of exploration.

Lockbook is a secure note-taking platform. If you’d like to learn more about it, check out our website, where you can learn more about the product, see some live demos, and dive deeper into Lockbook’s product documentation. The Lockbook section here captures some of the aspects of the project I’m most proud to have contributed to.

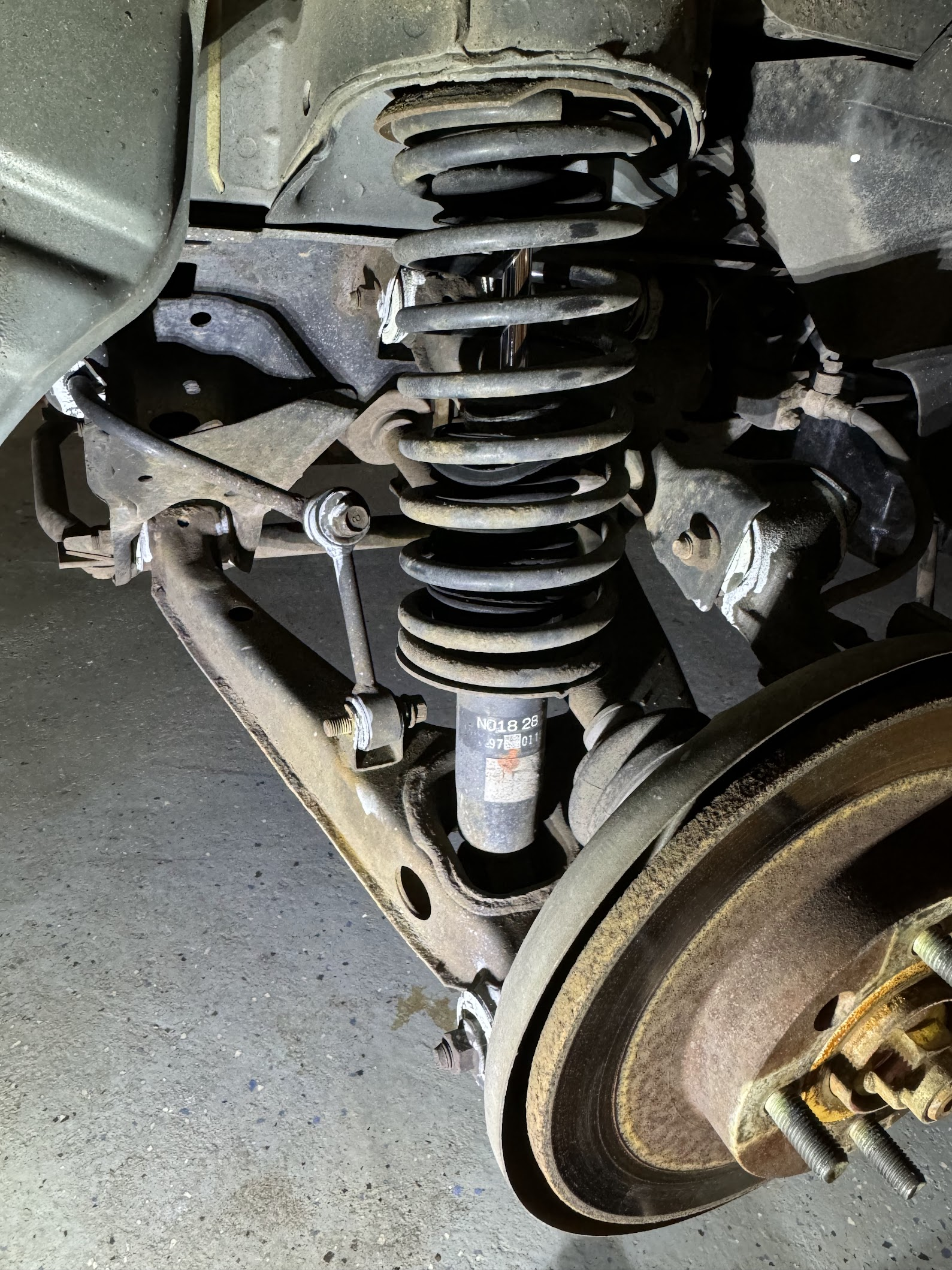

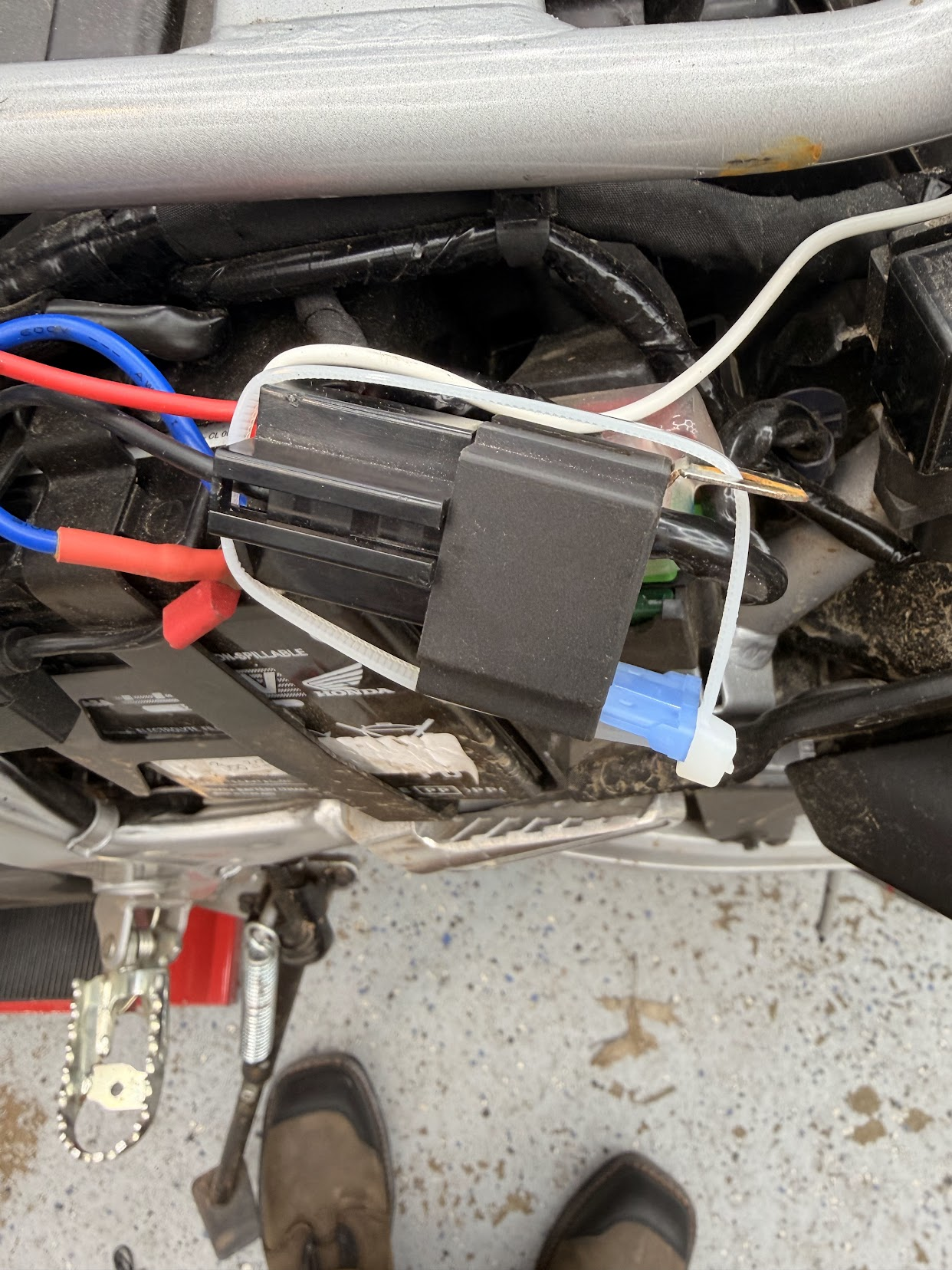

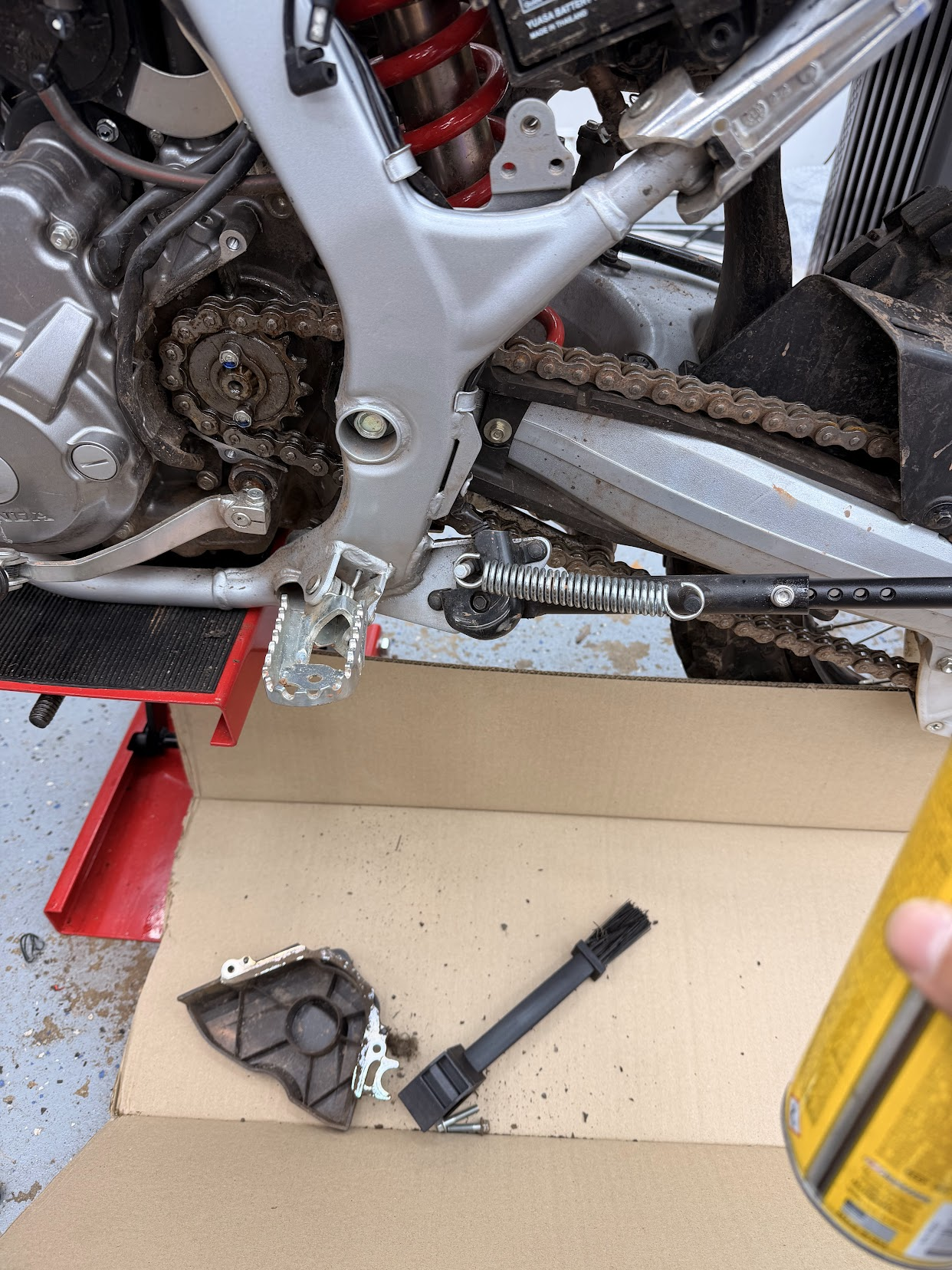

I like working with my hands; the Wrenching section catalogues my journey with various motorcycles, my project Miata, and other physical-world pursuits.

Finally, Life Tracking is a place where I can share things like what books I’m reading, how I organize my spaces, and anything else that’s captured my interest.

Some handy links:

This whole site is literally just a folder in my Lockbook that I edit as I experience existence. A tiny GitHub Actions script builds an mdBook and publishes it to GitHub Pages.

How do you see the world

I’ve been giving a lot of thought to the various ways people view problems and solutions, particularly: how does what you spend most of your time doing map to the way you view the world? For example, people who spend a lot of time thinking about marketing and sales may have the following perspective: all the problems and solutions are out there; the hard part is creating a narrative that connects the dots. On the other hand, I would wager that people in finance see things in terms of accurate pricing, forecasting, and resource allocation.

Of course, the thing that influences your perspective doesn’t have to be tied to your profession (or any profession for that matter), but it likely does need to be tied to whatever you’re putting your 10,000 hours into. I think this starts to happen somewhere around the time you consider the thing to be a central part of your identity. It’s likely tied to the point where you start to form your own opinions using expertise and specific knowledge that other people don’t have. At this point, you’re in a position to contribute something novel back, hopefully something of value.

Personally, I’m very interested in putting my 10,000 hours towards advancing the state of technology. I find advancing technology (particularly writing software) intrinsically rewarding; I’d happily do it in a vacuum. However, we don’t live in a vacuum, and while not every problem is technical in nature, I see many that are.

Now, I haven’t crossed hour 10,000 yet, but I’m ready to start sharing my ideas and the way I think about certain things. I’ve learned a lot so far, and I’ve formed a clearer idea of the type of technology I’m interested in advancing: technology that empowers the individual.

I want to use this blog to detail the things I’m exploring and to share what I value and the potential I see in the future.

Ideation

Over the last 10 years I’ve gone through the process of ideation, execution and delivery countless times. I’d like to share some lessons I’ve learned and how I think about projects today.

The Idea

It’s hard to evaluate how important an idea is relative to execution and presentation.

I think ideas are a dime a dozen, and most projects fail not because the idea was bad, but because an attempt was never made.

However, you should expect good ideas to be rare. I like using challenge-oriented thinking to filter out bad ideas:

First filter: does this exist already? It may exist and be popular, in which case maybe you just give that product or service a try. Maybe it doesn’t exist because it’s a foolish idea that would never work. Or maybe it doesn’t exist because no one’s made it yet. If you don’t make stuff, there is no stuff. Perhaps bringing this thing into existence is your calling.

Maybe your idea is out there already; you give it a try and have a bad experience. When you’re ready to give up on this product, you wonder to yourself: “Can I do a better job?” You have to evaluate how large your execution edge is. Do you have the expertise and resources to do this better? Have you overlooked something that makes this very hard? Peter Thiel’s Zero to One explores this idea in a bit more depth. In short, he provides the following intuition: unless your execution is orders of magnitude better than your competition’s, you’re better off seeking novel ideas that have a higher likelihood of earning you a monopoly.

Once this “market research” filter has been overcome, I look to gain perspective from people I respect. Traits I look for in people to discuss ideas with: generally capable, well-read, and open-minded. I want objective feedback, but I’m not too interested in knee-jerk reactions. My favorite conversations are ones where we try to build up the idea, then see if we can tear it apart.

After this, I just let the idea sit in a document next to other ideas. Unless there’s a unique opportunity, I’m primarily interested in working on things that would help me in some way. Once the idea lives in my notebook alongside the others, I make a note whenever I next feel the need for it. As I go through life, I put all associated thoughts into this document. My time is limited, and this gives my ideas an organic way to mature; it also lets me filter out ideas that seemed interesting in the moment but wouldn’t provide me or anyone else any real value.

By the time I sit down to start executing on an idea, I’m usually fed up with whatever stopgap I’ve been using, and I’m in a mental state where I don’t really care if other people will use this thing; I want it to exist for me. In my experience, this makes the process of willing the idea into existence a transcendent experience. You’ll understand your needs best and stop over-prioritizing other people’s opinions. The basis for a good MVP is the product you would use, not the hackathon PowerPoint presentation you’re trying to pass off as your product.

Once you have a core product you’re proud of, you can consider whether you want to solicit Lean Startup-style feedback from the market and your customers or continue to take a Jobs-style visionary approach to your product.

System Design

When I first started exploring programming I wasn’t sure how anything was made. As I learned about the various components of a stack, I started to form a clearer picture of the ways people design systems.

For instance, when I learned about if statements and strings, I understood how people validated forms. And when I learned how to process stdin and output to stdout I understood how something like grep is made. I didn’t necessarily understand how to write a regex parser, but I had a good sense of how CLIs come together.
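To make the grep comparison concrete, here is a minimal sketch of that stdin-to-stdout shape in Rust. It assumes plain substring matching (no regex engine), and the `run` function is our own illustration rather than how grep is actually implemented:

```rust
use std::io::{self, BufRead, Write};

/// Copies every line from `input` that contains `pattern` to `output`
/// and returns how many lines matched. Plain substring matching only:
/// the regex engine is the hard part this sketch deliberately skips.
pub fn run<R: BufRead, W: Write>(input: R, mut output: W, pattern: &str) -> io::Result<usize> {
    let mut matches = 0;
    for line in input.lines() {
        let line = line?;
        if line.contains(pattern) {
            writeln!(output, "{}", line)?;
            matches += 1;
        }
    }
    Ok(matches)
}

// A binary would wire this to the real streams, e.g.:
// fn main() -> io::Result<()> {
//     let pattern = std::env::args().nth(1).unwrap_or_default();
//     run(io::stdin().lock(), io::stdout().lock(), &pattern)?;
//     Ok(())
// }
```

Taking generic `BufRead` and `Write` parameters rather than the real streams keeps the filtering logic testable with in-memory buffers.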

As my understanding matured, I developed a sense of why certain designs were successful, as well as what we may expect from system design in the future. I’d like to share a snapshot of my current understanding.

When I’m trying to conceptualize the solution to a problem, I start by trying to figure out what shape my work will take. Usually, this is one of the following:

- Self-standing executable

- Classic web app

- Complex web app

- Novel architecture

Self-standing executable

A self-standing executable is a piece of software that doesn’t have critical external dependencies.

This generally takes the shape of:

- a static site (like this one)

- a mobile app

- a CLI

- a library

- a bot

- some games

These projects are the building blocks of our computing infrastructure. They take a look at a very specific problem and attempt to solve it excellently. There are some unique aspects of such projects:

Software in this category has particularly low maintenance costs. If the solution requires you to maintain a server, it vastly diminishes the long-term survivability of a project. For instance, CLIs (like grep or vim) or libraries (like openssl or TensorFlow) don’t require the upkeep of centralized services. While they may evolve and improve, you can probably use a variant from 10 years ago without any issue. And you can probably use that same variant 10 years from now. I would be surprised if the Skype client from 5 years ago worked properly today. It certainly wouldn’t work if Skype’s servers went offline. On the other hand, how would you go about “killing” vim, openssl, and similar projects?

Because you don’t have to worry about things like maintaining servers, you also don’t need to worry about scaling. Static sites are distributed via CDNs, CLIs via package managers, and apps in the App Store. Depending on the idea, you expose yourself to the possibility that your application is virally adopted.

Finally, your ability to prove that your implementation is correct decreases dramatically as the amount of code involved grows. I’m far more confident in my ability to play audio files locally than in my ability to stream music to my Bluetooth speaker. Consider the difference in the amount of code at play in those examples. Doing things locally, simply, and efficiently reduces the surface area of possible problems.

I think there’s a temptation here to monetize this sort of application. This could look something like making people sign up for accounts when that provides no benefit to the experience, or serving them ads. What you sacrifice by doing such things is the possibility for your creation to be widely adopted by all sorts of people over a long period. I doubt git would have reached where it is today if you had needed to create an account on Linus Torvalds’s website. Linus Torvalds is living a very comfortable life. I think it’s in your best interest to remove as many barriers to mass adoption as you can and create the best product you can. If you do a good job, you’ll be okay.

Classic web app

For a solution to fall into this category it usually necessitates that users create accounts with a centralized service to solve their problem. For instance, social networks, messengers, and banks fundamentally depend on their ability to authenticate users.

These sorts of services generally involve:

- Frontend clients: websites, mobile apps, CLIs

- API Servers: stateless, horizontally scalable

- Store of state: some DB which will depend on the type of data and how you access it

There are several technical challenges that come from these additional moving parts. But at this stage, the patterns are still pretty well explored. At some point, your website may become slow because you’re getting too much traffic. You’ll have to transition from one API server to many API servers, and you’ll likely have to use some sort of load balancer. It’ll be tricky for sure, but lots of people have explored this before you, and there shouldn’t be too many unknowns after you consume the right resources and ask the right questions.
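Why is the move to many API servers tractable? Because stateless servers are interchangeable, a load balancer only has to pick one. The sketch below shows round-robin selection that skips unhealthy replicas. `Backend` and `RoundRobin` are hypothetical names; in practice you'd reach for an off-the-shelf proxy or a cloud load balancer rather than writing this yourself:

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// One stateless API server replica behind the balancer (hypothetical type).
#[derive(Debug)]
pub struct Backend {
    pub addr: String,
    pub healthy: bool,
}

/// Round-robin balancer. Because replicas hold no state,
/// any healthy one can serve any request.
pub struct RoundRobin {
    backends: Vec<Backend>,
    next: AtomicUsize,
}

impl RoundRobin {
    pub fn new(backends: Vec<Backend>) -> Self {
        RoundRobin { backends, next: AtomicUsize::new(0) }
    }

    /// Picks the next healthy backend, skipping failed ones.
    /// Returns None only if there are no healthy replicas at all.
    pub fn pick(&self) -> Option<&Backend> {
        let n = self.backends.len();
        // Try each backend at most once per call.
        for _ in 0..n {
            let i = self.next.fetch_add(1, Ordering::Relaxed) % n;
            let backend = &self.backends[i];
            if backend.healthy {
                return Some(backend);
            }
        }
        None
    }
}
```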

The most common mistake in this realm is creating unneeded complexity. This could be a premature optimization (involving caches like Redis too early) or splitting your API layer into “microservices”. I’ll explore this particular mistake in a future post, but the main idea I’d like to stress is: be skeptical of additions to your architecture that stray too far from this model.

Complex web app

Scaling a classic web app is straightforward because the path is well known and generally involves adding or upgrading hardware. But sometimes this isn’t enough, and your performance needs require you to rethink your architecture. Generally, this happens because the time it takes for various computers to talk to each other introduces too much latency, or because you can’t mutate your state (if it’s stored in a DB) quickly enough. What you’ll usually end up doing is taking the component that’s proving difficult to scale and trying to solve the problem with a single computer: keeping state in RAM instead of on disk, and communicating via function calls rather than network requests.

Let’s consider an example: popular games like Dota, Fortnite, and Overwatch have to worry about matchmaking, running games, managing item ownership, and processing payments. Everything apart from administering the game logic can likely be engineered as a classic web app. But managing the state of the game (where players are, who did what, who’s winning) has tight real-time requirements. By creating a “stateful node”, you may be able to meet these real-time requirements, but you have likely created a new set of problems.
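Here is a minimal sketch of what a “stateful node” might look like for the game example. It assumes a toy game where players move and tag each other, and `GameState` and `Event` are hypothetical types. The entire match lives in RAM, and every update is a plain function call:

```rust
use std::collections::HashMap;

/// Hypothetical events a game server might receive from players.
pub enum Event {
    Move { player: u32, dx: i32, dy: i32 },
    Tag { by: u32, target: u32 },
}

/// The whole match lives in memory on a single node: no database round trip
/// sits between a player's input and the updated state.
#[derive(Default)]
pub struct GameState {
    pub positions: HashMap<u32, (i32, i32)>,
    pub scores: HashMap<u32, u32>,
}

impl GameState {
    pub fn join(&mut self, player: u32) {
        self.positions.insert(player, (0, 0));
        self.scores.insert(player, 0);
    }

    /// Applies one event with an ordinary function call.
    /// If this process crashes, the match state is lost with it.
    pub fn apply(&mut self, event: Event) {
        match event {
            Event::Move { player, dx, dy } => {
                if let Some(pos) = self.positions.get_mut(&player) {
                    pos.0 += dx;
                    pos.1 += dy;
                }
            }
            Event::Tag { by, target } => {
                // Only award a point if both players are in this match.
                if self.positions.contains_key(&target) {
                    if let Some(score) = self.scores.get_mut(&by) {
                        *score += 1;
                    }
                }
            }
        }
    }
}
```

Nothing here waits on a database or a network hop, which is where the latency win comes from. The flip side is that the state exists in exactly one process.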

Even though you’ve solved the performance issues that were caused by a database, it’s still not clear how you would scale this stateful node to thousands of users. You’ll likely need some sort of flexible infrastructure that will let you spin up these game servers on demand.

It’s also not obvious how you would achieve high availability. What happens when you want to update your stateful node? In the example of the game, this isn’t a significant problem: games are short-lived, and new games can start on new versions of the code. However, how do you go about updating the matching engine of an exchange? You can take downtime and lose money, or you can invest significant engineering resources to design a matching engine that runs on and shares state across multiple nodes and can be upgraded incrementally.

Making this component fault-tolerant can be a non-trivial task as well. In a classic web app, if one node fails, you can fall back to the other ones; there was no state in that node, so nothing of importance was lost. However, if your game server experiences a failure, those players likely just got disconnected (and are very upset).

The existence of a “stateful node” is just one type of architectural element that turns a “Classic” web app into a “Complex” one. Others can include cron jobs, data pipelines, or specialized hardware. Creating a specialized service that performs well and is highly available and fault-tolerant will likely require a lot of domain-specific knowledge. You won’t find a community or textbooks full of answers. You’ll likely just have to try several things and learn from experience. Every success, however, becomes a technical edge.

Novel architecture

Sometimes your problem domain imposes a strong constraint that requires something that hasn’t been seen before.

Decentralized projects like Bitcoin, BitTorrent, and Tor are worth studying. All these projects are relatively old; Bitcoin is 10+ years old at the time of writing. What Bitcoin promises (a store of value and payment system created outside the cathedral of big banks and big government) had been tried many times before. But because of the way those previous attempts were designed, they were shut down. Similarly, early file-sharing apps that were used to pirate music were trivially shut down as well. The problems these tools are trying to solve remained largely unsolved until their decentralized variants emerged.

Evolution of design

Today’s systems look this way because of our prior expectations of our computing infrastructure, as well as the software and hardware limitations of previous generations of software.

For instance, it’s hard to create a P2P application because of the NAT that IPv4’s limited address space necessitates. Addressing devices that don’t have fixed public IP addresses (which includes most consumer devices) is difficult and requires workarounds like STUN and TURN. New technologies on the horizon, as well as an increased appetite for decentralized technologies, could lead to a proliferation of P2P applications.
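For a sense of what STUN actually does: a client behind NAT sends a Binding Request to a public STUN server. The server replies with the address and port it saw the request arrive from, encoded as an XOR-MAPPED-ADDRESS attribute. The sketch below follows the RFC 5389 wire format, but it only builds the request header and decodes an IPv4 attribute value. Sockets, full response parsing, and IPv6 are left out, and the function names are our own:

```rust
use std::net::Ipv4Addr;

/// Fixed value that every RFC 5389 STUN message carries.
const MAGIC_COOKIE: u32 = 0x2112_A442;

/// Builds the 20-byte header of a STUN Binding Request with no attributes.
pub fn binding_request(transaction_id: [u8; 12]) -> [u8; 20] {
    let mut msg = [0u8; 20];
    msg[0..2].copy_from_slice(&0x0001u16.to_be_bytes()); // message type: Binding Request
    msg[2..4].copy_from_slice(&0u16.to_be_bytes()); // message length: no attributes
    msg[4..8].copy_from_slice(&MAGIC_COOKIE.to_be_bytes());
    msg[8..20].copy_from_slice(&transaction_id);
    msg
}

/// Decodes the value of an IPv4 XOR-MAPPED-ADDRESS attribute: the public
/// address and port the server observed, XORed with the magic cookie.
pub fn decode_xor_mapped_ipv4(value: &[u8]) -> Option<(Ipv4Addr, u16)> {
    // Layout: reserved (1 byte), family (1 byte, 0x01 = IPv4), port (2), address (4).
    if value.len() != 8 || value[1] != 0x01 {
        return None;
    }
    let x_port = u16::from_be_bytes([value[2], value[3]]);
    let x_addr = u32::from_be_bytes([value[4], value[5], value[6], value[7]]);
    // The port is XORed with the cookie's top 16 bits, the address with all 32.
    let port = x_port ^ (MAGIC_COOKIE >> 16) as u16;
    let addr = Ipv4Addr::from(x_addr ^ MAGIC_COOKIE);
    Some((addr, port))
}
```

With this reply, a peer learns its own public mapping and can share it with other peers. TURN covers the cases where that mapping alone isn't enough to connect.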

To me, the intersection of our shifting desires (performance, censorship resistance, privacy, etc.) and new technologies on the horizon is one of the most exciting areas of our craft, and that’s why I think these are some of the most valuable problems to work on.

Microservices FAQ

The world is too eager to use microservices. Motivations for splitting a program out into independent servers are on shaky ground. This results in needlessly lower productivity and lower quality software.

What is a microservice architecture?

An architecture in which several networked small (micro) programs (services) communicate to perform a task. For example, a streaming service may have independent services for Accounts, Recommendations, Billing and Streaming.

What is a monolith?

A single program which contains all the logic to perform a task. For example, a streaming service may have a monolith with routes for /accounts, /recommendations, /billing and /streaming.
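Here is a minimal sketch of that monolith in Rust. It assumes a toy handler per route. All module and function names are hypothetical, and a real service would use its web framework's router rather than a bare `match`. Each concern still gets its own module; they just ship as one program:

```rust
mod accounts {
    pub fn handle(user: &str) -> String {
        format!("account page for {}", user)
    }
}

mod billing {
    pub fn handle(user: &str) -> String {
        format!("invoices for {}", user)
    }
}

mod recommendations {
    pub fn handle(user: &str) -> String {
        format!("picks for {}", user)
    }
}

mod streaming {
    pub fn handle(user: &str) -> String {
        format!("stream session for {}", user)
    }
}

/// One program, one deployable: routes map to modules, not to servers.
pub fn route(path: &str, user: &str) -> Option<String> {
    match path {
        "/accounts" => Some(accounts::handle(user)),
        "/billing" => Some(billing::handle(user)),
        "/recommendations" => Some(recommendations::handle(user)),
        "/streaming" => Some(streaming::handle(user)),
        _ => None,
    }
}
```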

What is strong evidence something should be a microservice?

When the hardware requirements or performance characteristics of your program deviate significantly from your monolith’s. If your monolith is a traditional, stateless, horizontally scalable REST API and you need the ability to spin up n stateful game servers, one for each group of 10 people playing your game, you should probably spin those games up as independent services.

What is the productivity cost of an unnecessary microservice?

Reasoning about a distributed system is hard. If you needlessly split up your operations into independent services each with their own database you’re handicapping your ability to make illegal states unlikely or impossible.

You’re also usually taking calls that would be simple function calls checked by the compiler and turning them into network requests. In the best case, this forces you to use tools like gRPC and Protocol Buffers. In the worst case, it reduces the reliability of your operations. In either case, you’re going to spend much more time reasoning about how to roll out breaking changes to a distributed system, changes that would otherwise be light refactors.
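Here is a hypothetical sketch of what the compiler can check when these calls stay in-process. Billing can only charge an `AccountId` handed out by the accounts module. Because the field is private, no other module can fabricate one, so “charge an account that doesn’t exist” can’t even be expressed. Split across a network boundary, this guarantee would have to become runtime validation on both sides:

```rust
mod accounts {
    use std::collections::HashSet;

    /// Can only be constructed inside this module, so holding one
    /// proves the account was looked up and exists.
    #[derive(Debug, Clone, Copy, PartialEq)]
    pub struct AccountId(u64);

    pub struct Accounts {
        known: HashSet<u64>,
    }

    impl Accounts {
        pub fn new(ids: &[u64]) -> Self {
            Accounts { known: ids.iter().copied().collect() }
        }

        /// The only way to obtain an AccountId.
        pub fn lookup(&self, raw: u64) -> Option<AccountId> {
            if self.known.contains(&raw) {
                Some(AccountId(raw))
            } else {
                None
            }
        }
    }
}

mod billing {
    use super::accounts::AccountId;

    /// Takes an AccountId, not a raw u64: charging a nonexistent account
    /// is a type error rather than a runtime failure. If `AccountId` changes,
    /// the compiler flags every call site in the same build.
    pub fn charge(account: AccountId, cents: u64) -> String {
        format!("charged {:?} {} cents", account, cents)
    }
}
```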

Infrastructure-related productivity abstractions are hard. Your org will likely want a suite of support tools for each service, which can include monitoring, load balancing, logging, alerting, etc. You’re forcing your company into one of three outcomes:

- hire more operations staff

- lock yourself into a cloud ecosystem

- adopt tool(s) like Terraform, Ansible, and other SRE orchestration software.

Is a monolith harder to scale?

No, you can run n replicas of your monolith to achieve the same scale characteristics as your microservices.

What if I want to independently scale different portions of my app?

You can configure a reverse proxy to only send traffic from a particular route to a particular node, achieving the same flexibility.
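The decision a reverse proxy makes here can be sketched as a longest-prefix routing table. In practice you'd write this as proxy configuration (nginx, HAProxy, or a cloud load balancer). This Rust version, with its hypothetical pool names, only illustrates the logic. Every pool runs the same monolith binary; only the replica count behind each pool differs:

```rust
/// Maps path prefixes to named pools of identical monolith replicas.
pub struct RouteTable {
    rules: Vec<(String, String)>, // (prefix, pool)
    default_pool: String,
}

impl RouteTable {
    pub fn new(default_pool: &str) -> Self {
        RouteTable { rules: Vec::new(), default_pool: default_pool.to_string() }
    }

    pub fn add(&mut self, prefix: &str, pool: &str) {
        self.rules.push((prefix.to_string(), pool.to_string()));
    }

    /// Longest matching prefix wins, so "/streaming/live" can be sent to a
    /// different pool than "/streaming" if needed. Unmatched paths use the default.
    pub fn pool_for(&self, path: &str) -> &str {
        self.rules
            .iter()
            .filter(|(prefix, _)| path.starts_with(prefix.as_str()))
            .max_by_key(|(prefix, _)| prefix.len())
            .map(|(_, pool)| pool.as_str())
            .unwrap_or(self.default_pool.as_str())
    }
}
```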

Isn’t it about separation of concerns?

You should organize code to separate concerns. Your API framework probably supports the idea of routes, and your language probably supports modules, packages, or libraries.

Isn’t a microservice architecture more resilient to failures?

Most architectures have several single points of failure: components whose failure would result in a total system outage. Generally, this is your database. Reasoning about multiple databases is usually not worthwhile and causes more outages than it prevents. More often than not, the components of a microservice architecture depend on each other, and there are very few “unimportant” components. In the streaming example, it’s very likely that lots of interactions will cause you to engage with the Accounts service to see if a user exists, or the Billing service to see if they’re allowed to watch something. If there’s a significant defect in any major component, it’s usually a total outage for most customers.

Microservices don’t reduce the need for strong engineering processes that catch defects (like docs, tests, PR reviews, and other forms of QA).

Microservices allow team A to work independently of team B

This is usually an organizational problem that microservices are hiding.

If team A and team B are working on independent components then each team should be able to make changes to their portion of the monolith and deploy whenever they’d like.

If the two teams are consuming each other’s services, then they still need to give the same level of attention to breaking changes, and the same considerations apply whether that interaction happens over the network or via a function call.

Microservices allow my team to deploy more often

The only reason your company shouldn’t deploy code that’s gone through all the QA processes is if the service is stateful and no level of downtime is permissible due to hard engineering constraints. If you’re unable to deploy a stateless REST API without downtime, fixing that should almost certainly become a high engineering priority, and it isn’t a good reason to build a microservice in the meantime.

There are services that are too risky to update

Services that are too risky to update are probably too risky to exist. Microservices here are a band-aid on a problem whose solution is better engineering processes.

Microservices allow my company to use different languages

Using different languages has a high cognitive cost and misses some huge opportunities for engineering culture.

Different teams have different priorities: the wallet team at a crypto exchange may care about security, while the exchange team may care about performance.

Having these ideas expressed in a single language allows junior engineers to understand contrasting values more clearly. It promotes cross-team collaboration and stewardship rather than silos of ownership.

Using a single language allows teams to share code, learnings, and expertise across the company.

There should be incredibly tangible reasons for deviating from an organization’s primary language, like the availability of specific tooling that delivers a massive ROI, or the infeasibility of sharing a language across two dramatically different modalities of thinking (such as frontend and backend) when the cost of a novel solution is unacceptable.

More often than not, however, using multiple languages without strong reasons indicates to me a failure of engineering leadership to define clear values.

A new note-taking app

Many moons ago my friends and I found ourselves frustrated with our computing experience. Our most important tools: our messengers, our note-taking apps, and our file storage all seemed to leave us with the same bitter taste in our mouths. Most of the mainstream solutions were trapped inside a browser, the epitome of sacrificing quality for the lowest common denominator. They didn’t pass muster for basic security and privacy concerns. Building on top of these systems was a painful experience. We were also growing concerned that we did not share the same ethics and values as the large companies running these platforms.

So we ventured out into the land of FOSS. We built our systems from the ground up and experimented with self-hosted solutions. While we learned a lot from this experience, these solutions didn’t stand the test of time. As we brought our friends into this world, we found ourselves constantly apologizing for the sub-par experience. It felt like we solved some of our problems, but made other ones (UX) worse.

At this point, we took a step back. I knew we could do better. The apps we were being critical of weren’t some of the fresher ideas in computing. They are things that we’ve been doing for decades. Why don’t these products feel more mature? What should software that’s been around this long feel like?

What is ideal?

Software that’s been around this long shouldn’t be trapped in the browser. A browser is a convenient place for the discovery of new information, but it’s not the place I want to visit for heavily used, critical applications. When I look at my devices, whether my iPhone or my Linux laptop, the apps I can use with the least friction are simple, native applications. They have the most context about the device I’m using encoded into the application. This friction-free experience is why people reach for Apple Notes on their iPhones. And when they open those same notes on their iPad, they find rich support for their Apple Pencil. For me, a minimal, friction-free, context-aware experience is more valuable than feature richness.

Whatever experience I have on one device should carry over seamlessly to any device I may end up owning in the future. My notes shouldn’t be trapped on Apple devices should I want to transition to Linux. Very few actions should require a network connection, and any network interactions should be deferrable so people outside of metropolitan areas don’t have a poor experience.
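One way to make network interactions deferrable is an outbox: edits are recorded locally right away and pushed to the server whenever a connection is available. This is a hypothetical sketch (`Outbox`, `Edit`, and the `send` callback are our own names), not a description of Lockbook’s actual sync protocol:

```rust
use std::collections::VecDeque;

/// A hypothetical local edit to a note.
#[derive(Debug, Clone, PartialEq)]
pub struct Edit {
    pub note: String,
    pub content: String,
}

/// Edits are recorded locally immediately and queued for the server.
#[derive(Default)]
pub struct Outbox {
    pending: VecDeque<Edit>,
}

impl Outbox {
    pub fn record(&mut self, edit: Edit) {
        self.pending.push_back(edit);
    }

    /// Tries to push queued edits in order. `send` stands in for the real
    /// network call; on the first failure we stop and keep the rest queued,
    /// so nothing is lost while offline. Returns how many edits were sent.
    pub fn flush<F>(&mut self, mut send: F) -> usize
    where
        F: FnMut(&Edit) -> Result<(), String>,
    {
        let mut sent = 0;
        while let Some(edit) = self.pending.front() {
            if send(edit).is_err() {
                break;
            }
            self.pending.pop_front();
            sent += 1;
        }
        sent
    }

    pub fn len(&self) -> usize {
        self.pending.len()
    }
}
```

Stopping at the first failure preserves the order of edits, which matters when later edits build on earlier ones.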

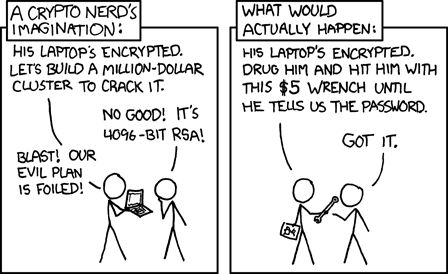

For now, likely most of these services will have to interact with some sort of backend. Everything that backend receives should be encrypted by the customer themselves, in a manner that nobody besides them and the people they give access to can see that content. We shouldn’t ask the customer for any information the service doesn’t require. There is very little reason that a user must provide an email address or a phone number to use a note-taking app. This level of security and privacy shouldn’t cost the user anything in terms of quality. Our customers may be whistle-blowers, journalists, or citizens living under oppressive regimes. They simply cannot afford to trust and they shouldn’t have to.

This software is too important not to open source. Any software claiming to be secure needs to be open source to prove that claim. Sensitive customers need the ability to build minimal clients with small dependency trees from source on secure systems. Open-sourcing components like your server signals to the world that they can host critical infrastructure themselves, even if the people behind the product lose the will to keep the lights on. Open source doesn’t end with making the source code available; this software should be built out in the open with help from an enthusiastic community. People should be able to extend the tools for fun or profit with minimal friction.

Reaching for an ideal

After much discussion, we decided that the best place to start was a note-taking app. We felt it was the product category with the most room for growth. Architecturally, it also paves the way for us to tackle storing files. And so began the three-year-long journey to create Lockbook, a body of work I’m proud to say has stayed true to the vision outlined above. At the moment, Lockbook is not quite ready for adoption, as it’s in the early stages of alpha testing. But I’d like to use this space to share updates on our progress as well as document how we overcame some interesting engineering challenges, like:

- Productively maintaining several native apps with a small team

- Creating rich, non-web, cross-platform UI elements

- Leveraging a powerful computer to find bugs

If you’d like to learn more about Lockbook you can:

If you’d like to take an early look at Lockbook, we’re available on all platforms.

- GitHub Releases

- Apple App Store

- Google Play

- Brew

- AUR

- Snap

Why Lockbook chose Rust

Lockbook began its journey as a bash script. As it started to evolve into something more serious, one of our earliest challenges was identifying a UI framework we were willing to bet on. As we explored, we were weighing things like UI quality, developer experience, language selection, and so on.

Our choice of UI framework had implications for our server as well. If we chose JavaFX and native Android, we would likely want to choose a JVM-based language for our server to share as much code as possible.

As we wrote and rewrote our application, we discovered that most of our effort, even on our clients, was not front-end code. When we were implementing user management, billing, file operations, collaboration, compression, and encryption, the lion’s share of the work was around traditional backend-oriented tasks. Things like data modeling, error propagation, managing complex logic, handling database interactions, and writing tests were where we were spending most of our time. Many of these things had to take place on our clients because all our user data is end-to-end encrypted. Additionally, some of these operations were sensitive to slight differences in implementation. If your encryption and decryption are subtly different across two different clients, your file may be unreadable.

It was also becoming clear to us that the applications that looked and felt the best to us were created in that platform’s native UI framework. So our initial investigation around UI frameworks morphed into an inquiry into what the best repository for business logic was. Ideally, this repository would give us great tools for writing complex business logic and would be ultimately portable.

Tools for managing complexity

Our collective experience made us gravitate towards a particular spirit. At Gemini, Raayan and I saw how productive we were within a foreign, large-scale Scala codebase. Informed by that experience, we were looking for a language with an expressive, robust type system.

A “robust type system” goes beyond what you’d find in languages like Java, Python, or Go. We were looking for type systems where null or nil were the exception, rather than the norm. We want it to be apparent when a function could return an error, or an empty value, and have ergonomic ways to handle those scenarios.
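In Rust, both possibilities show up in the function signature: `Option` for a value that may be absent and `Result` for an operation that may fail, with `?` to propagate errors. The functions below are hypothetical illustrations:

```rust
use std::collections::HashMap;
use std::num::ParseIntError;

/// Absence is part of the signature: callers must handle `None`.
pub fn find_note<'a>(notes: &'a HashMap<String, String>, name: &str) -> Option<&'a str> {
    notes.get(name).map(String::as_str)
}

/// Failure is part of the signature too; `?` returns the parse error early
/// instead of letting an invalid value flow onward.
pub fn parse_font_size(raw: &str) -> Result<u32, ParseIntError> {
    let size: u32 = raw.trim().parse()?;
    Ok(size.clamp(8, 72))
}

/// The compiler won't let us use the note body without deciding
/// what happens when it's missing.
pub fn describe(notes: &HashMap<String, String>, name: &str) -> String {
    match find_note(notes, name) {
        Some(body) => format!("{} has {} bytes", name, body.len()),
        None => format!("{} not found", name),
    }
}
```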

We wanted to have sophisticated interactions with things like enums; specifically, we wanted to be able to model the idea of exhaustivity. When an idea we were working with evolved to have more cases, we wanted our compiler to guide us to all the locations that needed to be updated.
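Here is what exhaustivity looks like in Rust, using a hypothetical `FileKind` enum. A `match` must cover every variant. Adding a variant therefore turns each match that doesn’t handle it into a compile error that points at the exact location to update:

```rust
/// Hypothetical kinds of files a note-taking app might store.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum FileKind {
    Document,
    Folder,
    Drawing,
}

/// No wildcard arm: if a new variant (say, a hypothetical `Link`) is added
/// to `FileKind`, this function stops compiling until the new case is handled.
pub fn icon(kind: FileKind) -> &'static str {
    match kind {
        FileKind::Document => "doc",
        FileKind::Folder => "folder",
        FileKind::Drawing => "pencil",
    }
}
```

Adding a `_ =>` arm would silence that guidance, which is why code that relies on exhaustivity tends to avoid wildcards.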

There were a handful of other features we were looking for which can broadly be categorized into two similar ideas:

We wanted to express as much as we could in our primary programming language. Things that would traditionally be documented (this fn will return null in this situation) or things that would be expressed in configuration (TLS configuration handled by a different program in YAML) would ideally be expressed in a language that contributors understood intimately. Ideally in a language where the compiler was providing strong guarantees against mistakes and misuse.
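Here is a sketch of expressing configuration in the language instead of YAML, assuming a hypothetical server config. Modeling TLS as an enum makes “TLS enabled but no certificate” impossible to write. A misspelled field also becomes a compile error rather than a silently ignored key:

```rust
use std::path::PathBuf;

/// TLS settings as a type: enabling TLS without a cert and key
/// cannot even be written down.
#[derive(Debug)]
pub enum Tls {
    Disabled,
    Enabled { cert: PathBuf, key: PathBuf },
}

#[derive(Debug)]
pub struct ServerConfig {
    pub port: u16, // an out-of-range literal like 70000 is rejected at compile time
    pub tls: Tls,
}

impl ServerConfig {
    /// Derived from the TLS setting, so the two can never disagree.
    pub fn scheme(&self) -> &'static str {
        match self.tls {
            Tls::Disabled => "http",
            Tls::Enabled { .. } => "https",
        }
    }
}
```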

We wanted our language and tools to help us detect defects as early as possible in the development lifecycle. Most software developers are used to trying to capture defects at test time, but we found that trying to capture defects even earlier, at compile time, allowed us to drop into flow more easily. The following is our preference for when we’d like to catch defects:

- compile time

- test time

- startup time

- PR time

- internal test time

- by a customer

Our strongest contenders for languages here were Rust, Haskell, and Scala.

Ultra-portability

Ideally, this repository would not place constraints on where it could be used. If our repository was in Scala, for instance, we’d be able to use it on Desktop, our Server, and Android, but we’d run into problems on Apple devices.

We could use something like JS: virtually every platform has a way to invoke some sort of WebView, which allows you to execute JS. But we’d had plenty of bad experiences with vanilla JavaScript. We found that evolutions on JS like TypeScript were also on a shaky foundation. Despite the JS ecosystem being popular and old, it didn’t feel very mature. Finally, we didn’t like the way most JS-based applications felt, whether Electron or React Native.

Both JS and Scala would require tremendous overhead due to the default environments in which they run. We needed something lighter weight than invoking a little browser every time we wanted to call into our core. Our team members were pretty experienced in Golang, and cgo was an ideal fit for what we were looking for. It would allow us to ship our core as a C library accessible from any programming language we were interested in interoperating with. We had some concerns about the long-term overhead of cgo and garbage collection generally, but those wouldn’t be immediate concerns.

Similarly, Rust had a rich collection of tools for generating C bindings for Rust programs and a mature conceptualization of FFI. Though it wasn’t an immediate criterion, we were inspired by the fact that almost everything in Rust was a zero-cost abstraction. In that spirit, FFI in Rust would have virtually no additional overhead compared to a C program. We were also drawn to Cargo, which felt like the package manager we had been waiting for and would be particularly useful for our complicated build process.
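To give a feel for what exposing Rust over a C ABI looks like, here is a minimal sketch of a single exported function. The function `count_words` is hypothetical, not part of Lockbook’s core; a matching C header could be written by hand or generated with a tool such as cbindgen.

```rust
use std::ffi::{c_char, CStr};

/// Exported with the C calling convention and an unmangled symbol name, so
/// C callers can declare it as:
///     size_t count_words(const char *text);
///
/// # Safety
/// `text` must be null or point to a valid, NUL-terminated C string.
#[no_mangle]
pub unsafe extern "C" fn count_words(text: *const c_char) -> usize {
    if text.is_null() {
        return 0;
    }
    // Borrow the C string in place; no copy is made.
    let s = unsafe { CStr::from_ptr(text) };
    match s.to_str() {
        Ok(s) => s.split_whitespace().count(),
        // Not valid UTF-8.
        Err(_) => 0,
    }
}
```

Apart from the null check and UTF-8 validation, there is no marshalling layer here: the caller’s pointer is read directly.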

Our strongest contenders for languages here were Rust, Go, and C.

Taking the plunge

Learning Rust wasn’t a smooth process, but solid documentation helped us overcome the steep learning curve. Every language I’ve learned so far has shaped the way I view programming, and it was refreshing to see high-level concepts like iterators, dynamic dispatch, and pattern matching discussed alongside their performance implications.

Rust has an interesting approach to memory management: it heavily restricts what you can do with references. In return, it guarantees that all your references are always valid and free of data races. It does this at compile time, without the need for any costly runtime mechanism like garbage collection.
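A small sketch of those rules in action: two threads read the same slice through shared references, which the compiler allows because shared references cannot mutate, and `std::thread::scope` guarantees both threads finish before the borrowed data can go away. The functions `parallel_sum` and `append_total` are hypothetical examples, not Lockbook code.

```rust
use std::thread;

/// Sums a slice in two halves on two threads. Both threads hold shared
/// borrows of `data`; the scope ends only after they have been joined,
/// so the borrows can never outlive the data. No garbage collector or
/// reference counting is involved.
pub fn parallel_sum(data: &[u64]) -> u64 {
    let (left, right) = data.split_at(data.len() / 2);
    thread::scope(|s| {
        let l = s.spawn(move || left.iter().sum::<u64>());
        let r = s.spawn(move || right.iter().sum::<u64>());
        l.join().unwrap() + r.join().unwrap()
    })
}

pub fn append_total(data: &mut Vec<u64>) {
    let total = parallel_sum(data.as_slice());
    // Mutation requires exclusive (`&mut`) access. If a thread were still
    // holding a shared borrow of `data` at this point, this push would be
    // rejected at compile time instead of racing at runtime.
    data.push(total);
}
```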

Once we were over the learning curve, we prototyped the core library we’d been planning, along with a CLI and a server that used it. During a period when many of us were rapidly prototyping many different solutions, this was the one that stood the test of time. Soon after the CLI, a C binding followed, then iOS and macOS applications. Today we have JNI bindings and an Android app as well. This core library will one day be packaged and documented as the Lockbook SDK, allowing you to extend Lockbook from any language (more on this later).

Further personal reflections

You can probably predict what your experience with Rust is going to be based on how you felt about the above two priorities. Rust is an experiment in providing the highest-level features at no runtime cost. If you don’t find Option<T> a useful construct, you’re not likely to appreciate waiting for the compiler. If you don’t mind the latency introduced by garbage collection, you’re not going to enjoy wrestling with the borrow checker.

I wasn’t specifically seeking out performance, but before Rust there was always a slight uncertainty, while programming, about whether I would have to rewrite a given component in C or spend time tuning a garbage collector. In Rust I don’t write everything optimally at first: when I need to, I’ll clone() things or stick them in an Arc<Mutex<T>> to revisit later. But I appreciate that all these artifacts of the productivity vs. performance trade-off are explicitly present for me to review, rather than implicitly constrained by my development environment.
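Here is a small sketch of what those explicit trade-offs look like in practice. `clone()` pays for a copy so the caller can hold data without holding a lock, and `Arc<Mutex<T>>` pays for reference counting and locking to share mutable state across threads. Both costs are spelled out in the code, so they are easy to find and revisit later. `DraftStore` and `add_from_threads` are hypothetical names.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical shared list of draft titles. Cloning a `DraftStore`
/// only clones the `Arc`, so all clones point at the same `Vec`.
#[derive(Clone, Default)]
pub struct DraftStore {
    // Shared, mutable state: the cost (atomic refcount + lock) is in the type.
    titles: Arc<Mutex<Vec<String>>>,
}

impl DraftStore {
    pub fn add(&self, title: &str) {
        self.titles.lock().unwrap().push(title.to_string());
    }

    /// Returns an owned copy: the "get it working now" choice. The caller
    /// can keep the data without holding the lock, at the cost of a clone.
    pub fn snapshot(&self) -> Vec<String> {
        self.titles.lock().unwrap().clone()
    }
}

/// Adds one draft from each of `n` threads, all writing to the same store.
pub fn add_from_threads(store: &DraftStore, n: usize) {
    let handles: Vec<_> = (0..n)
        .map(|i| {
            let store = store.clone();
            thread::spawn(move || store.add(&format!("draft {}", i)))
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
}
```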

For our team, Rust has certainly been a factor in onboarding new contributors. We’ve lost contributors who didn’t buy into the ideas and were turned away by Rust. But we’ve also encountered people who specifically seek out Rust projects because they share our excitement. It’s hard to tell what the net impact is, but as is the case every year, Rust is a language a lot of people love. Significant open source and commercial entities, from Linux to AWS, are making permanent investments in Rust.

This excitement does, however, bring a lot of junior talent to the ecosystem. Consequently, even though Rust is roughly as old as Go, many of its packages feel like they’re not ready for production. By my estimation, this is because, in addition to understanding the subject matter of their package, a library maintainer needs to understand Rust pretty deeply. Additionally, people within the Rust ecosystem are optimizing for different things: some for compile times and binary size, others for static inference and performance. In many cases these goals are mutually exclusive.

In some cases, this is a short-term problem that will fade as features are stabilized and best practices are identified. In other cases, it’s an irreconcilable aspect of the ecosystem that will simply result in lots of packages solving the same problem in slightly different ways.

This is something we should expect, as Rust is a language that’s trying to serve all programmers from UI developers to OS designers. And though it may cost me some productivity in the short term while I’m forced to contend with this nuance, in the long term it massively broadens my horizons as a software engineer.

Personally, what got me over the steep learning curve was a rare feeling that the knowledge I was building while learning Rust is a permanent investment in my future, not a trivial detail about a flaw in the tool I’m using. I’m very excited to see where Rust takes us all in the future.

db-rs

The story of how Lockbook created its own database for speed and productivity.

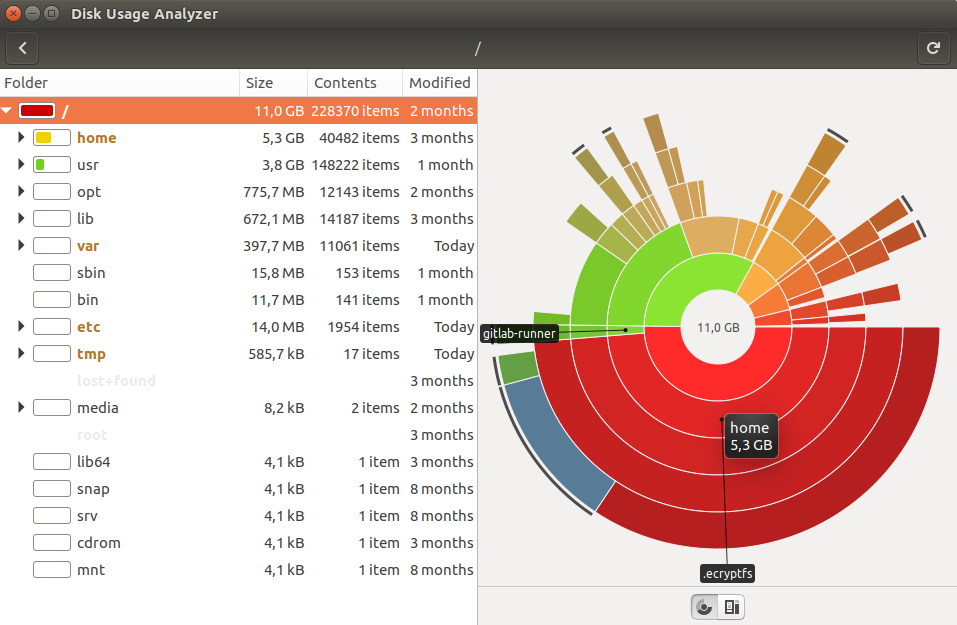

As a backend engineer, the architecture I see used most commonly is a load balancer distributing requests to several horizontally scaled API servers. Those API servers generally talk to one or more stores of state. Lockbook also started this way: we load balanced requests using HAProxy, ran a handful of Rust API nodes, and stored our data in Postgres and S3.

A year into the project, we had developed enough of the product that we understood our needs more clearly, but we were still early enough into our journey where we could make breaking changes and run experiments. I had some reservations about this default architecture, and before the team stabilized our API, I wanted to see if we could do better.

My first complaint was about our interactions with SQL. It was annoying to shuffle data back and forth between the fields of our structs and the columns of our tables. Over time our SQL queries grew more complicated, and it was hard to express and maintain ideas like “a user’s file tree cannot have cycles” or “a file cannot have the same name as a non-deleted sibling.” We were constantly trying to decide whether to express something in SQL, or to read a user’s data into our API server, perform and validate the operation in Rust, and then save the new state of their file tree. Concerns around transaction isolation, consistency, and performance were always hard to reason about. We were growing frustrated because we knew how we wanted this data to be stored and processed, and we were burning cycles fighting our declarative environment.
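For illustration, here is roughly what checking those two invariants in plain Rust might look like once a user’s file tree is in memory. The `FileMeta` type, its fields, and the convention that the root is its own parent are all assumptions made for this sketch; they are not Lockbook’s actual schema.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical, simplified file metadata. The root's `parent` is its own id.
#[derive(Debug, Clone)]
pub struct FileMeta {
    pub id: u64,
    pub parent: u64,
    pub name: String,
    pub deleted: bool,
}

#[derive(Debug, PartialEq)]
pub enum TreeError {
    Cycle(u64),
    PathConflict(u64, u64),
    MissingParent(u64),
}

/// Every file must reach the root without revisiting a node, and no two
/// non-deleted siblings may share a name.
pub fn validate(files: &HashMap<u64, FileMeta>) -> Result<(), TreeError> {
    // Cycle check: walk each file up towards the root.
    for file in files.values() {
        let mut seen = HashSet::new();
        let mut current = file;
        while current.parent != current.id {
            if !seen.insert(current.id) {
                return Err(TreeError::Cycle(file.id));
            }
            current = files
                .get(&current.parent)
                .ok_or(TreeError::MissingParent(current.id))?;
        }
    }
    // Path-conflict check: (parent, name) must be unique among live, non-root files.
    let mut names: HashMap<(u64, &str), u64> = HashMap::new();
    for file in files.values().filter(|f| !f.deleted && f.parent != f.id) {
        let key = (file.parent, file.name.as_str());
        if let Some(&other) = names.get(&key) {
            return Err(TreeError::PathConflict(other, file.id));
        }
        names.insert(key, file.id);
    }
    Ok(())
}
```

Because this is ordinary Rust, it can be unit tested, reused on the client, and changed with the compiler’s help, which is much of what the SQL version made difficult.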

My second complaint was about how much infrastructure we had to manage. Take Postgres itself: running it at production scale is not trivial. There’s a great deal of trivia you have to understand to make Postgres work properly with your API servers and your hardware. First, we had to understand which Postgres features our database libraries supported. In our case, that meant evaluating whether we additionally needed to run PgBouncer, a connection pooler for Postgres, which would be yet another piece of infrastructure to manage. Regardless of PgBouncer, configuring Postgres itself requires an understanding of how it interacts with your hardware. From Postgres’ configuration guide:

PostgreSQL ships with a basic configuration tuned for wide compatibility rather than performance. Odds are good the default parameters are very undersized for your system….

That’s just Postgres. Similar complexities existed for S3, HAProxy, and the networking and monitoring considerations of all the nodes mentioned thus far. This was quickly becoming overwhelming, and we hadn’t broken ground on user collaboration, one of our most ambitious features. For a sufficiently large team, this may be no big deal: just hire some ops people to stand up the servers so the software engineers can engineer the software. For our resource-constrained team of five, this wasn’t going to work. Additionally, when we surveyed the useful work our servers were performing, we knew this level of complexity was unnecessary.

For example, when a user signs up for Lockbook or makes an edit to a file, the actual useful work that our server did to record that information should have taken no more than 2ms. But from our load balancer’s reporting, those requests were taking 50-200ms. We were using all these heavy-weight tools to be able to field lots of concurrent requests without paying any attention to how long those requests were taking. Would we need all this if the requests were fast?
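One rough way to see why fast requests reduce the need for all this machinery is Little’s law, which relates the average number of requests in flight $L$ to throughput $\lambda$ and per-request latency $W$. Plugging in the latencies above with an illustrative, assumed load of 100 requests per second:

$$
L = \lambda W, \qquad
100\,\tfrac{\text{req}}{\text{s}} \times 0.2\,\text{s} = 20 \text{ requests in flight}, \qquad
100\,\tfrac{\text{req}}{\text{s}} \times 0.002\,\text{s} = 0.2 \text{ requests in flight}.
$$

At 200 ms per request, the backend has to juggle around 20 concurrent requests; at 2 ms, a single worker sits idle about 80% of the time.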

We ran some experiments with Redis and stored files in EBS instead of S3, and the initial results were promising. We expressed all our logic in Rust and vastly increased the amount of code we were able to share with our clients (core). We dramatically reduced our latency, and our app felt noticeably faster. However, most of the remaining request time was spent waiting for Redis to respond over the network (even when we hosted our application and database on the same server), and we were still spending time ferrying information in and out of Redis. I knew there was something interesting to explore here.

So after a week of prototyping, I created db-rs. The idea was to make a stupid-simple database that could be embedded as a Rust library directly into our application: no network hops, no context switches, and huge performance gains. It should be easy for someone to specify a schema in Rust and to pick the performance characteristics of these simple key-value style tables. This is Core’s schema, for instance:

```rust
#[derive(Schema, Debug)]
pub struct CoreV3 {
    pub account: Single<Account>,
    pub last_synced: Single<i64>,
    pub root: Single<Uuid>,
    pub local_metadata: LookupTable<Uuid, SignedFile>,
    pub base_metadata: LookupTable<Uuid, SignedFile>,
    pub pub_key_lookup: LookupTable<Owner, String>,
    pub doc_events: List<DocEvent>,
}
```

The types Single, LookupTable, and List are db-rs table types, backed by a Rust Option, HashMap, and Vec respectively. They capture changes to their data structures, serialize those changes, and append them to the end of a log, one of the fastest ways to persist an event.
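To make the idea concrete, here is a minimal, std-only sketch of a lookup table backed by a HashMap that appends every change to a log file and rebuilds itself by replaying that log on startup. This is not db-rs’s implementation or API: `LogTable` is a hypothetical name, keys and values are plain Strings, and a tab-separated text line stands in for bincode (so keys must not contain tabs and values must not contain newlines). Recovery from a partially written final line is deliberately omitted.

```rust
use std::collections::HashMap;
use std::fs::{File, OpenOptions};
use std::io::{self, BufRead, BufReader, Write};
use std::path::Path;

/// Hypothetical append-only-log-backed table (not db-rs's API).
pub struct LogTable {
    /// In-memory state that all reads are served from.
    data: HashMap<String, String>,
    /// Log file that every mutation is appended to.
    log: File,
}

impl LogTable {
    /// Opens the log at `path`, replaying every recorded change (last write wins).
    pub fn open(path: &Path) -> io::Result<Self> {
        let mut data = HashMap::new();
        if path.exists() {
            for line in BufReader::new(File::open(path)?).lines() {
                let line = line?;
                if let Some((key, value)) = line.split_once('\t') {
                    data.insert(key.to_string(), value.to_string());
                }
            }
        }
        let log = OpenOptions::new().create(true).append(true).open(path)?;
        Ok(LogTable { data, log })
    }

    /// Appends the change to the log, syncs it to disk, then applies it in memory.
    pub fn insert(&mut self, key: &str, value: &str) -> io::Result<()> {
        writeln!(self.log, "{}\t{}", key, value)?;
        self.log.sync_data()?;
        self.data.insert(key.to_string(), value.to_string());
        Ok(())
    }

    /// A read is a HashMap lookup that hands back a reference, not a copy.
    pub fn get(&self, key: &str) -> Option<&str> {
        self.data.get(key).map(String::as_str)
    }
}
```

Writes cost one sequential append plus a sync, and reads never touch the disk, which is the shape of the trade-off described above.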

The types Account, SignedFile, Uuid, etc. are types Lockbook uses. They all implement serde’s ubiquitous Serialize and Deserialize traits, so we never again need to think about converting between our types and their on-disk format. Internally, db-rs uses the bincode format, an incredibly performant and compact representation of your data.

What’s cool here is that when you query a table, you’re handed references to your data. The database isn’t fetching bytes, serializing them, and sending them over the wire for your program to then deserialize into its fields. A read from one of these tables is a direct memory access, and because of Rust’s memory guarantees, you can be sure that the reference will be valid for the duration of your access to it.

What’s exciting from an ergonomics standpoint is that your schema is statically known by your editor. It’s not defined and running on a server somewhere else. So if you type `db.` you get a list of your tables. If you select one, that table type’s contract is shown to you, with your keys and values. Additionally, our backend stack no longer requires any container orchestration whatsoever: you just need cargo to run our server. This has been a massive boon for quickly setting up environments, whether locally or in production.

The core ideas of the database fit in fewer than 800 lines of code and are fairly easy to reason about. This is a database that’s working well for us not because of what it does, but because of all the things it doesn’t do. And what we’ve gained from db-rs is a tremendous amount of performance and productivity.

Ultimately, this is a different way to think about scaling a backend. When you string together two to four pieces of infrastructure over the network, you incur a big latency cost, and hopefully what you gain in return is availability. But do you? If you’re using something like Postgres, your database is still your single point of failure. You’ve just surrounded it with a lot of ceremony, and I’m skeptical that the ceremony helps Postgres respond to queries faster or helps engineers deliver value more quickly.

db-rs has been running in production for half a year at this point. Most requests are answered in less than 1 ms. We anticipate that on a modest EC2 node we should be able to scale to hundreds of thousands of users and field hundreds of requests per second. Should we need to, we can scale vertically one to two orders of magnitude beyond this point. Ultimately, our backend plans to follow a scaling strategy similar to email, where users have a home server, and our long-term vision is a network of decentralized server operators. But that’s a dream that’s still quite far away.

As a result, what Lockbook ultimately converged on is probably my new default approach for building simple backend systems. If this intrigues you, check out the source code of db-rs or take it for a spin.

Currently, db-rs exactly models the needs of Lockbook. There are key weaknesses around concurrency and offloading seldom-accessed data to disk. Whenever Lockbook or one of db-rs’ other users needs these things, they’ll be added. Feel free to open an issue or pull request!

db-rs (attempt 2)

When Lockbook first began, its architecture was what I would consider the typical backend architecture. When a client made a request, it would be load balanced to one of several API servers. The selected API server would communicate with Postgres and S3 to fulfill that request. As we designed the product, we understood our needs better and gradually re-evaluated various components of our architecture. We learned a lot through this process, and although our needs are not unique, the architecture we’re converging towards is unique in its simplicity: a single API server running a single Rust program. In part, this architecture was enabled by an expressive, lightweight, embedded database I wrote called db-rs. Transitioning to this architecture allowed us to move much more quickly, and we expect it to (conservatively) handle hundreds of requests a second made by hundreds of thousands of users, all for around $50 / month. Today, for most typical projects, this would be my default approach.

Like most backends, ours has users with accounts, and accounts manage our domain-specific objects: their files. Both users and files have metadata associated with them: users have billing information; files have names, locations, collaboration information, sizes, etc.

We were finding that doing typical things in the context of a typical architecture was slow and annoying. For instance, much of this data modeling and access would be done with SQL. Your backend, however, is certainly not written in SQL, so this requires some level of data conversion for every field that your server persists. There are likely to be subtle mismatches around how your language handles types (limits, signs, encoding) or even meta-ideas around types (nullability). You may try an ORM, which has its own strengths and weaknesses. We also found that certain ideas about files were hard to model at the database level: for instance, two files with the same parent cannot have the same name, unless one of them is deleted. Or, even trickier: you cannot have a cycle in your file tree.

Maybe it’s silly to try to do this in SQL, so instead you read all the relevant data into your application, run your validations in your server, and only write to the database once you’re satisfied with the state of your data. But make sure you’re up to speed on your transaction isolation levels! Oh, and also make sure no one writes to your database without going through your server first, otherwise they may invalidate your assumptions.

Okay, maybe you do want to do this in SQL then, so you write a complicated query in a language with very little support for things like tests or package management, and hope that you’ve expressed your query and set up your tables in a manner that performs acceptably. Let’s say you’ve crossed all those hurdles; now let’s set up some environments. You can have your team install Postgres directly and field complaints about it being a pretty heavy application, or you can containerize it and field complaints about Docker instead. In production you have to determine whether you need PgBouncer for connection pooling. Okay, what about configuring Postgres itself for production: can I just run Postgres on a Linux instance? Nope (from postgres.org):

PostgreSQL ships with a basic configuration tuned for wide compatibility rather than performance. Odds are good the default parameters are very undersized for your system.

Not too bad once you read through it, but after some reflection, this was the sort of thing slowing our team down. We had similar interactions with our load balancer and S3, and in the past we’ve had similar interactions with tools used at scale at our day jobs. We were ready to try something new to see if we’d get different outcomes. Our application code itself, while complicated, was a tiny fraction of the total request time.

We re-architected to eliminate all network hops between our server and its storage: instead of Postgres, we initially used a mutex, a bunch of hash tables, and an append-only log. Instead of S3, we saved files locally using EBS. We configured our warp-based Rust server to handle TLS connections directly rather than through our load balancer. Our latencies across all our endpoints dropped to less than 2 ms without any attention paid to performance within our application layer. We realized we didn’t need concurrency or horizontal scalability for their own sake. We wanted our application to be able to scale to the user base of our dreams, and bringing the latency of each endpoint down by several orders of magnitude was a far easier way to achieve that goal.

Inspired by the initial results, I sat down to see how much progress I could make on the core idea. db-rs is what resulted. In db-rs, you specify your database schema in Rust:

```rust
#[derive(Schema, Debug)]
pub struct CoreV3 {
    pub account: Single<Account>,
    pub last_synced: Single<i64>,
    pub root: Single<Uuid>,
    pub local_metadata: LookupTable<Uuid, SignedFile>,
    pub base_metadata: LookupTable<Uuid, SignedFile>,
    pub pub_key_lookup: LookupTable<Owner, String>,
    pub doc_events: List<DocEvent>,
}
```

A single source of truth, version controlled alongside all your other application logic. The types you see are your own Rust types: as long as a type implements serde’s ubiquitous Serialize and Deserialize traits, you won’t have to write any conversion code to persist it. You can select a table type with known and straightforward performance characteristics. Everything within the database is statically known, so all your normal Rust tooling can easily answer questions like: What tables do I have? How do I append to this table? What key does this query expect? What value will it give me?

Moreover, when you query, you’re handed references to data within the database, resulting in the fastest possible reads. When you write, your data is serialized in the bincode format, an incredibly performant and compact representation, and persisted to an append-only log, generally one of the fastest ways to persist a piece of information.

As a result of this new way of thinking about our backend, we don’t have to learn the nitty-gritty of:

- SQL

- Postgres at scale

- S3

- Load balancers

Locally, using this database is just a matter of running your server with `cargo run`, which is a massive boon for iteration speed and project onboarding. People trying to self-host Lockbook (not a fully supported use case just yet, but a priority for us) are going to have a significantly easier time doing so now.

If you’re primarily storing things that could be stored in Postgres and are writing a Rust application, the productivity and performance gains are likely to be very similar for you. If you had a reference to all your data and could generate a response to a given API request within 1 ms, you’d likely also be looking at a throughput of hundreds of requests per second. If you’re an experienced Rust developer, think about how quickly you could get a Twitter clone off the ground.

If this intrigues you, check out the source code of db-rs or take it for a spin. The source is fewer than 800 significant lines of code and currently reflects the exact needs of Lockbook. It’s very possible that it falls short for you in some way. For instance, your entire dataset must currently fit in memory (like Redis). This is fine for Lockbook for the next year or so, but one day it will no longer be okay. If this is a problem for you, feel free to open an issue or pull request!

Lockbook’s architectural history (attempt 1)

Today Lockbook’s architecture is relatively simple: we have a core library that all clients use to connect to a server. Both core and server are responsible for managing the metadata associated with each file and its content. Our server is a single mid-sized EC2 instance and makes no network connections for file-related operations. Our core library communicates directly with our server. Operations that may traditionally be handled by a reverse proxy (TLS negotiation, load balancing, etc.) are handled by a single Rust binary. Our stack achieves throughput and scale by being minimal and fast: our server responds to all file-related requests with sub-millisecond latency.

Our stack wasn’t always this lean. When we first set out, our stack looked much more traditional: we used HAProxy to load balance requests across two server nodes and to terminate TLS. Our server stored files in S3 and metadata in Postgres. In core, we stored our metadata in SQLite. For most teams, outgrowing a simple tool usually means adopting a more complicated, full-featured version of that tool. For us, outgrowing a tool often involved taking a step back and creating a simpler version of the tool that better fit our needs.

File contents

Take S3, for instance. We found that interacting with S3 was becoming too slow and was a source of outages, albeit rare ones. We saw three paths forward:

- Invest deeper in S3. We could expose our users’ (encrypted) files publicly and have core fetch files directly from S3 instead of having our server abstract this away.

- Make our architecture more complicated by caching S3 files somewhere.

- Have our server manage the files itself, locally.

With S3, we had a handful of crates we could choose between. If we managed files ourselves (writing locally to a drive), we’d be programming against a significantly more stable and well-understood API: POSIX system calls. We could use EBS to make various trade-offs between performance and cost. We would have a slight increase in code complexity, as we’d need to learn how to do atomic writes (write the file somewhere temporary and atomically rename it when the write is complete). But we’d have a significant decrease in overall engineering complexity:

- no need to learn S3-specific concepts (access control, presigned URLs, etc.).

- no need to simulate S3 in environments where using S3 is infeasible (local development, CI, on-prem deployments), and no need to wonder whether there are subtle differences between various S3-compatible products.

- a significantly smaller surface area for failure.
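The atomic-write technique mentioned above can be sketched with nothing but the standard library: write to a temporary file in the same directory, sync it, then rename it over the target. This is a minimal sketch, not Lockbook’s code; `atomic_write` is a hypothetical name, the `<name>.tmp` naming is an arbitrary choice, and it assumes a single writer per file (two concurrent writers would share the temporary path).

```rust
use std::fs::{self, File};
use std::io::{self, Write};
use std::path::Path;

/// Writes `contents` to `path` so readers see either the old file or the
/// new one, never a partially written file.
pub fn atomic_write(path: &Path, contents: &[u8]) -> io::Result<()> {
    // Keep the temporary file next to the target: rename is only atomic
    // within a single filesystem.
    let mut tmp_name = path
        .file_name()
        .ok_or_else(|| io::Error::new(io::ErrorKind::InvalidInput, "path has no file name"))?
        .to_os_string();
    tmp_name.push(".tmp");
    let tmp_path = path.with_file_name(tmp_name);

    {
        let mut tmp = File::create(&tmp_path)?;
        tmp.write_all(contents)?;
        // Make sure the bytes are on disk before the rename makes them visible.
        tmp.sync_all()?;
    }

    // Atomically replace the target (rename(2) on POSIX systems).
    fs::rename(&tmp_path, path)?;

    // On Unix, syncing the parent directory makes the rename itself durable.
    // Opening a directory this way fails on Windows, so errors are ignored.
    if let Some(dir) = path.parent() {
        if let Ok(dir) = File::open(dir) {
            let _ = dir.sync_all();
        }
    }
    Ok(())
}
```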

File metadata

Initially file metadata was stored in Postgres, and to better understand why we moved away from Postgres, I should explain what our metadata storage goals are. When a user attempts to modify a file in some way we need to enforce a number of constraints. We need to make sure no one modifies someone else’s files, no one updates a deleted file, no one puts a tree in an invalid state (cycles, path conflicts, etc), and so on. Initially we tried to express these operations and constraints in SQL and after a couple rounds of iteration it was clear this wasn’t the right approach. Our SQL expressions were complicated, hard to maintain, and the source of many subtle bugs.

So we took a step back and moved significant amounts of our logic into Rust. The flow of a request became: a user is trying to perform operation X, so fetch all relevant context from the database, perform the operation, run validations, and persist the side effects. This moved most of the complexity back into Rust, where we could easily write tests, use third-party libraries, and iterate quickly with a compiler.
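A minimal sketch of that fetch, perform, validate, persist flow, using a rename operation as the example. Everything here is hypothetical: `Tree` is a flat map of file ids to names standing in for a user’s stored data, and the only invariants checked are a non-empty name and uniqueness among files (all files are treated as siblings).

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for a user's stored files: id -> name.
pub type Tree = HashMap<u64, String>;

#[derive(Debug, PartialEq)]
pub enum OpError {
    NotFound(u64),
    EmptyName,
    NameTaken(String),
}

/// Hypothetical operation: rename file `id` to `new_name`.
pub fn rename(stored: &mut Tree, id: u64, new_name: &str) -> Result<(), OpError> {
    // 1. Fetch all relevant context (here, a copy of the user's tree).
    let mut staged = stored.clone();

    // 2. Perform the operation on the staged copy.
    let name = staged.get_mut(&id).ok_or(OpError::NotFound(id))?;
    *name = new_name.to_string();

    // 3. Run validations against the resulting state.
    if new_name.is_empty() {
        return Err(OpError::EmptyName);
    }
    if staged.iter().any(|(&other, n)| other != id && n == new_name) {
        return Err(OpError::NameTaken(new_name.to_string()));
    }

    // 4. Persist the side effects only once the new state is known to be valid.
    *stored = staged;
    Ok(())
}
```

A failed validation simply drops the staged copy, so the stored state is never left half-updated.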

Even with this refactor, we were still largely unsure about our usage of Postgres. Managing Postgres at scale is non-trivial: the surface area of learning how to configure it to keep more data in memory and juggle multiple parallel connections (PgBouncer) is pretty large. Additionally, the local development experience of Postgres was pretty poor: it either involved a deep install on your system or necessitated containers. And there were subtle differences between how it might be configured locally and in production, differences which could meaningfully impact the way queries executed. Finally, we were willing to do more up-front thinking about how we would store and access data. We didn’t need the flexibility of SQL, and we found ourselves facing more problems because of its declarative nature.

Since most of the complicated parts were in Rust, switching to Redis was a fairly inexpensive engineering lift. It was significantly easier to reason about how Redis would behave in various situations and to manage it at scale under load. We dropped in Redis as a replacement for Postgres, and with this replacement we were able to eliminate an organization-wide dependency on Docker: another set of concepts we’d no longer need to reason about to achieve our goals. Our team experienced a vastly better local development experience from this change.

It was now time to pay attention to core. Core shares similar goals with our server regarding the operations it performs, but it is additionally constrained: it requires an embedded database and is sensitive to things like startup time and resource requirements. Core also needs to be easy to build for any arbitrary Rust target, so the ideal database would probably be a pure Rust project. Our journey started with SQLite, which was initially bumpy for some of our compilation targets. But that journey ended the moment we were no longer interested in expressing complicated operations in SQL. Informed by our server-side experience, we intentionally left the problem unsolved for a while as we invested in other areas of the project, and simply persisted our data in giant JSON files. As expected in those early days, we experienced data corruption: sometimes our writes would be interrupted, or multiple processes sharing a data directory would race with each other.

db-rs

After investing in other areas of our project, I had done a lot of thinking about databases, especially about what would be ideal for a project that wanted to express as much as possible in Rust. I wanted a database that was fast by virtue of minimalism. For instance, simply being embedded affords your application a massive amount of throughput. For our project, this also meant that we could just stick our database behind a Mutex and significantly reduce the number of problems we were trying to solve at the moment. I wanted a database designed with Rust users in mind and with ultra-low latency. I also wanted to give Rust users an ergonomic way to express a schema with Rust types (not database-specific types), without having to worry about serialization formats.
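Here is a small sketch of why "just stick it behind a Mutex" removes a whole class of problems. When the lock is held for an entire read, validate, write sequence, no other request can interleave with a half-finished update, which is the guarantee a SQL transaction isolation level would otherwise have to provide. The `Db` type, the quota rule, and `record_upload` are hypothetical and much simpler than anything Lockbook actually stores.

```rust
use std::collections::HashMap;
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical embedded "database": plain in-memory data behind one Mutex.
#[derive(Default)]
pub struct Db {
    /// Bytes stored per user.
    usage: HashMap<String, u64>,
}

/// Hypothetical per-user quota in bytes.
pub const QUOTA: u64 = 1_000;

/// Reads current usage, validates the quota, and writes the new usage while
/// holding the lock the whole time, so concurrent uploads cannot both pass
/// the check and overshoot the quota.
pub fn record_upload(db: &Mutex<Db>, user: &str, bytes: u64) -> Result<u64, String> {
    let mut db = db.lock().unwrap();
    let used = db.usage.get(user).copied().unwrap_or(0);
    if used + bytes > QUOTA {
        return Err(format!("over quota: {} + {} > {}", used, bytes, QUOTA));
    }
    db.usage.insert(user.to_string(), used + bytes);
    Ok(used + bytes)
}

/// Simulates `n` concurrent requests each uploading `bytes` for one user,
/// returning how many succeeded and the final recorded usage.
pub fn concurrent_uploads(n: usize, bytes: u64) -> (usize, u64) {
    let db = Arc::new(Mutex::new(Db::default()));
    let handles: Vec<_> = (0..n)
        .map(|_| {
            let db = Arc::clone(&db);
            thread::spawn(move || record_upload(&db, "user", bytes).is_ok())
        })
        .collect();
    let succeeded = handles
        .into_iter()
        .filter_map(|h| h.join().ok())
        .filter(|&ok| ok)
        .count();
    let used = db.lock().unwrap().usage.get("user").copied().unwrap_or(0);
    (succeeded, used)
}
```

With 20 concurrent 100-byte uploads against a 1,000-byte quota, exactly 10 succeed regardless of thread scheduling. The trade-off is that requests are serialized, which is acceptable only because each one holds the lock so briefly.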

We needed a database that was:

- embedded

- fast

- ergonomic

- durable

So in about a week I created db-rs.

Once db-rs existed, it was easy to drop it in thanks to the abstractions already present in core and server. Once again, this simplification boosted performance massively, simplified our code and our infrastructure, and reduced the number of foreign concepts our team needed to understand and build around.

With request latency the lowest we’d ever seen, and without any significant effort to optimize our code (we just eliminated things we didn’t want), we also eliminated nginx, had warp perform TLS handshakes, and committed to a single server node for the near future. We estimate this modestly priced EC2 instance ($50 / month) can handle hundreds of requests a second from hundreds of thousands of users. If we need to, we have a healthy amount of vertical scaling headroom. Beyond that, our long-term plan involves a scaling strategy similar to what’s used by self-hosted email.

Today our only remaining project dependency for most work is the Rust toolchain. Local dev environments spin up instantly without the need for any container or network configuration. Deploying a server means building a binary for Linux and executing it.

Lockbook’s Editor

Building a complex UI element in Rust that can be embedded in any UI framework

As Lockbook’s infrastructure stabilized, we began to focus on our markdown editing experience. Across our various platforms, we’ve experimented with a few approaches for providing our users with an ergonomic way to edit their files. On Android, we use Markwon, a community-built markdown editor. On Apple, we initially did the same thing but found that the community components didn’t have many of the features our users were asking for. So as a next step, we dove into Apple’s TextKit API to begin work on a more ambitious editor.

Initially, this was fine, but as we worked through our backlog of requests, I found things that were going to be very time-expensive to implement using this API. We were having performance problems when editing large documents. The API was difficult to work with, especially because there were no existing open bodies of work that implemented features like automatic insertion of text (when adding to a list), support for non-text elements (bullets, checkboxes, inline images), or multiple cursors (real-time collaboration or power-user text editing). Even if we did invest the effort to pioneer these features using TextKit, we would have to replicate our efforts on our other platforms. And lastly, none of my other teammates knew the TextKit API intimately, so I wouldn’t be able to easily call on their help for one of the most important aspects of our product. We needed a different approach.

In the past, I’ve discussed our core library, a place where we’ve been able to solve some of our hardest “backend” problems and bring the solutions to foreign programming environments. We needed something similar for UI components: a place where we could invest the time, build an editor from the ground up, and embed it in any UI library.

We considered creating a web component. Perhaps we could mitigate some of the downsides of web apps if we only presented a web-based view when a document was loaded. Maybe we could leverage Rust’s great support for WebAssembly for the complicated internals. Ultimately I felt like we could do better, so I continued thinking about the problem. On Linux, we’d begun experimenting with egui, a lightweight Rust UI library. Its README had a long list of places you could embed egui, and I wondered if I could add SwiftUI or Android to that list.

And so began my journey of gaining a deeper understanding of wgpu, immediate mode UIs, and how this editor might work on mobile devices.

Most UI frameworks have an API for directly interfacing with a lower-level graphics API. In SwiftUI, for instance, you can create an MTKView, which gives you access to Metal (Apple’s hardware-accelerated graphics API) through MetalKit. Using this view, you can effectively pass a handle to the GPU into Rust code and initialize an egui component. In the host UI framework, you can capture whichever events you need (keyboard and mouse events, for instance) and pass them to the embedded UI framework. It’s the simplicity of immediate mode programming that makes this achievable in a short period, and it’s the flexibility of immediate mode programming that makes it a great choice for complex and ambitious UI components. The approach seemed like it held promise, so we gave it a go.
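The host-to-Rust boundary described above can be sketched without any real graphics code. In the hypothetical types below, the host UI framework translates its native callbacks into a small `InputEvent` enum and calls `frame` once per redraw; the embedded editor consumes the events and recomputes its output from current state on every frame. None of these names are egui’s or Lockbook’s actual API; the sketch only illustrates why an immediate mode component needs such a narrow interface with its host.

```rust
/// Hypothetical events the host translates from its native callbacks.
#[derive(Debug, Clone, PartialEq)]
pub enum InputEvent {
    Text(String),
    Backspace,
    Tap { x: f32, y: f32 },
}

/// Hypothetical per-frame output the host needs to act on.
#[derive(Debug, Default, PartialEq)]
pub struct FrameOutput {
    pub text_len: usize,
    pub wants_keyboard: bool,
}

#[derive(Default)]
pub struct Editor {
    buffer: String,
    focused: bool,
}

impl Editor {
    /// Called by the host once per frame with the events gathered since the
    /// previous frame. There is no retained widget tree to keep in sync with
    /// the host: state is updated from events, and everything the host needs
    /// is recomputed from that state.
    pub fn frame(&mut self, events: &[InputEvent]) -> FrameOutput {
        for event in events {
            match event {
                InputEvent::Text(s) => self.buffer.push_str(s),
                InputEvent::Backspace => {
                    self.buffer.pop();
                }
                InputEvent::Tap { .. } => self.focused = true,
            }
        }
        // A real implementation would lay out and paint `self.buffer` here.
        FrameOutput {
            text_len: self.buffer.chars().count(),
            wants_keyboard: self.focused,
        }
    }
}
```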

After a month of prototyping and pair programming with my co-founder Travis, we had done it. We shipped a version of our text editor on macOS, Windows, and Linux that supported many of the features our team and users had been craving. The editor was incredibly performant, easily achieving 120fps on massive documents. Most importantly, we had a clear picture of how we would go about implementing our most ambitious features over the next couple of years.

After we released the editor on the desktop, we began bringing it to mobile devices. This was a new frontier for this approach. On macOS, we just had to pass through keyboard and mouse events. On a mobile device, there are many subtle ways people can edit documents: autocorrect, speech-to-text, and clever ways to navigate documents. After some research, we found a neatly documented protocol, UITextInput, which outlines the various ways you can interact with a software keyboard on iOS. We also found a corresponding document in Android’s documentation.

So back to work we went. We expanded our SwiftUI <-> egui integration, giving it the ability to handle events that egui doesn’t recognize. We piped through these new events, refined the way we handle mouse and touch input, and a couple of weeks ago we merged our iOS editor, bringing many of our gains to a new form factor.
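As a rough illustration of the kind of events a plain keyboard-and-mouse pipeline lacks, here is a hypothetical, simplified model in Rust. It loosely mirrors three UITextInput methods, `replace(_:withText:)`, `setMarkedText(_:selectedRange:)`, and `unmarkText()`, but the `TextInputEvent` and `Buffer` types are illustrative only, and offsets are plain char counts where UIKit uses opaque `UITextPosition`/`UITextRange` objects.

```rust
/// Hypothetical, simplified model of text-input operations an iOS host might
/// forward. Offsets are in chars; UIKit itself uses opaque position objects.
#[derive(Debug, Clone, PartialEq)]
pub enum TextInputEvent {
    /// Replace chars `start..end` with `text` (e.g. accepting an autocorrection).
    Replace { start: usize, end: usize, text: String },
    /// Show provisional "marked" text at the cursor (e.g. IME composition).
    SetMarked(String),
    /// Commit the marked text as ordinary text.
    Unmark,
}

#[derive(Debug, Default)]
pub struct Buffer {
    pub text: String,
    pub cursor: usize,                  // in chars
    pub marked: Option<(usize, usize)>, // char range of uncommitted text
}

impl Buffer {
    pub fn apply(&mut self, event: TextInputEvent) {
        match event {
            TextInputEvent::Replace { start, end, text } => {
                self.splice(start, end, &text);
                self.cursor = start + text.chars().count();
                self.marked = None; // this sketch simply drops any pending composition
            }
            TextInputEvent::SetMarked(text) => {
                // Overwrite the current marked range, or insert at the cursor.
                let (start, end) = self.marked.unwrap_or((self.cursor, self.cursor));
                self.splice(start, end, &text);
                let new_end = start + text.chars().count();
                self.marked = Some((start, new_end));
                self.cursor = new_end;
            }
            TextInputEvent::Unmark => self.marked = None,
        }
    }

    /// Replaces chars `start..end` (assumed valid, `start <= end`) with `text`.
    fn splice(&mut self, start: usize, end: usize, text: &str) {
        let (s, e) = (byte_index(&self.text, start), byte_index(&self.text, end));
        self.text.replace_range(s..e, text);
    }
}

/// Converts a char offset into a byte offset, clamping to the end of `text`.
fn byte_index(text: &str, char_offset: usize) -> usize {
    text.char_indices().nth(char_offset).map_or(text.len(), |(b, _)| b)
}
```

Marked text is the interesting case: the keyboard repeatedly rewrites the same provisional range (as during Japanese input, where "にほん" becomes "日本") before committing it, which a simple "insert character" event can’t express.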

We’re very excited about the possibilities this technique opens up for us. It allows us to maintain the look & feel that users crave while giving us an escape hatch down into our preferred programming environment when we need it. Once our editor is more mature and the kinks of our integration are worked out, we plan to apply this strategy to more document types. Long term we’re interested in making it easy for people to quickly spin up their own SwiftUI component backed by Rust (as presently this still requires a lot of boilerplate code).

On net, the editor has been a big step forward for us. It’s already live on desktops and will be shipping on iOS as part of our upcoming 0.7.5 release. It’s a large and fresh body of work, so we anticipate some bugs. If you encounter any, please report them on our GitHub issues. And, as always, if you’d like to join our community, we’d love to have you on our Discord server.

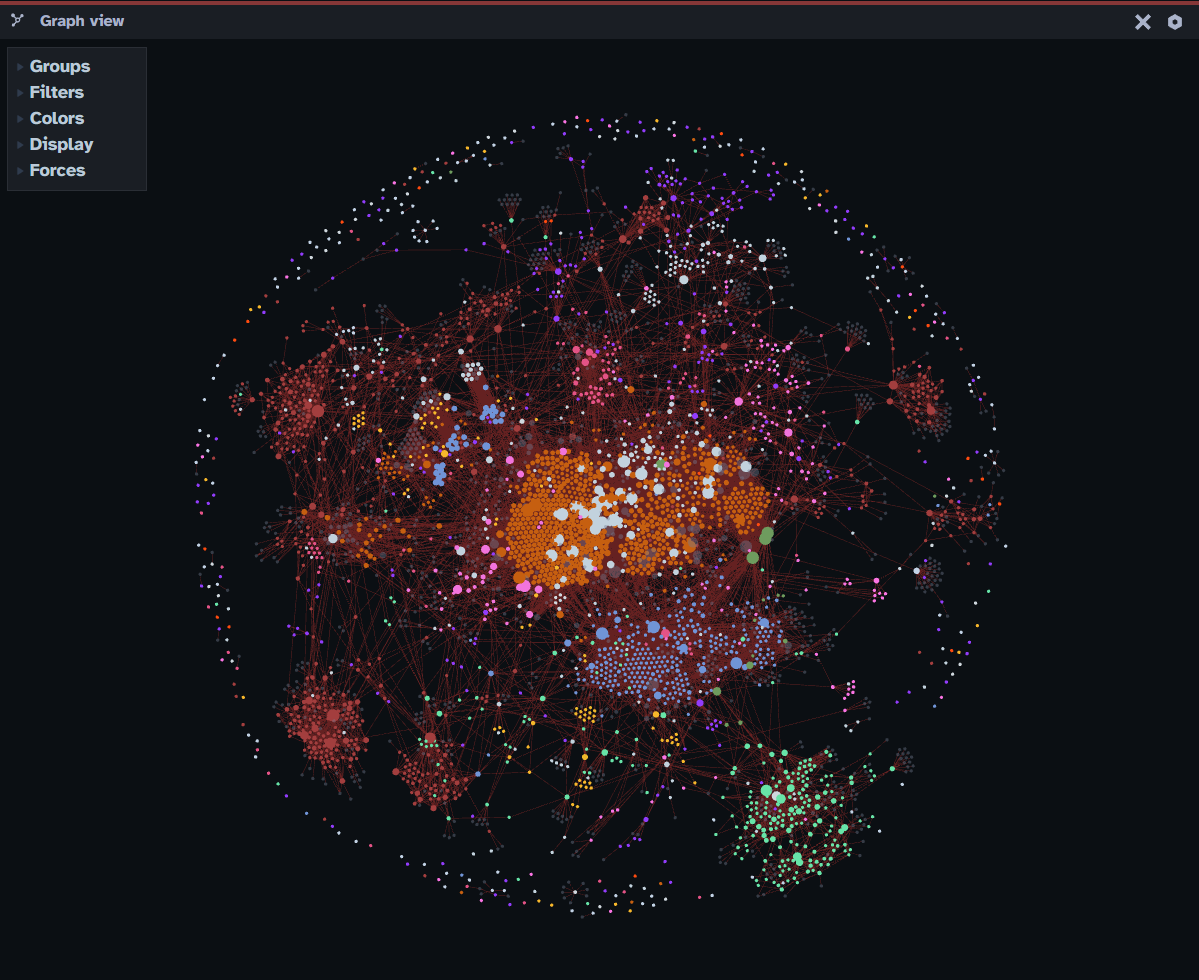

Defect hunting

When designing Lockbook, we knew we wanted to support a great offline experience. To our surprise, this grew to become one of the largest areas of complexity. Forming consensus is an active area of research in computer science, but Lockbook has an additional constraint. Unlike our competition, much of that complexity lives on our users’ devices, which we can’t update remotely. Additionally, the administrative actions we can take are limited: most data entered by users is encrypted, and their devices will reject changes that aren’t signed by people they trust. All this is to say that the cost of error is higher for our team, and it’ll likely take longer for our software to mature and reach stability. Today I’d like to share a tester we created to help us find defects and accelerate that maturation process. We affectionately call this tester “the fuzzer”. We’ll explore whether this is a good name a bit later, but first, let’s talk about the sorts of problems we’re trying to detect.

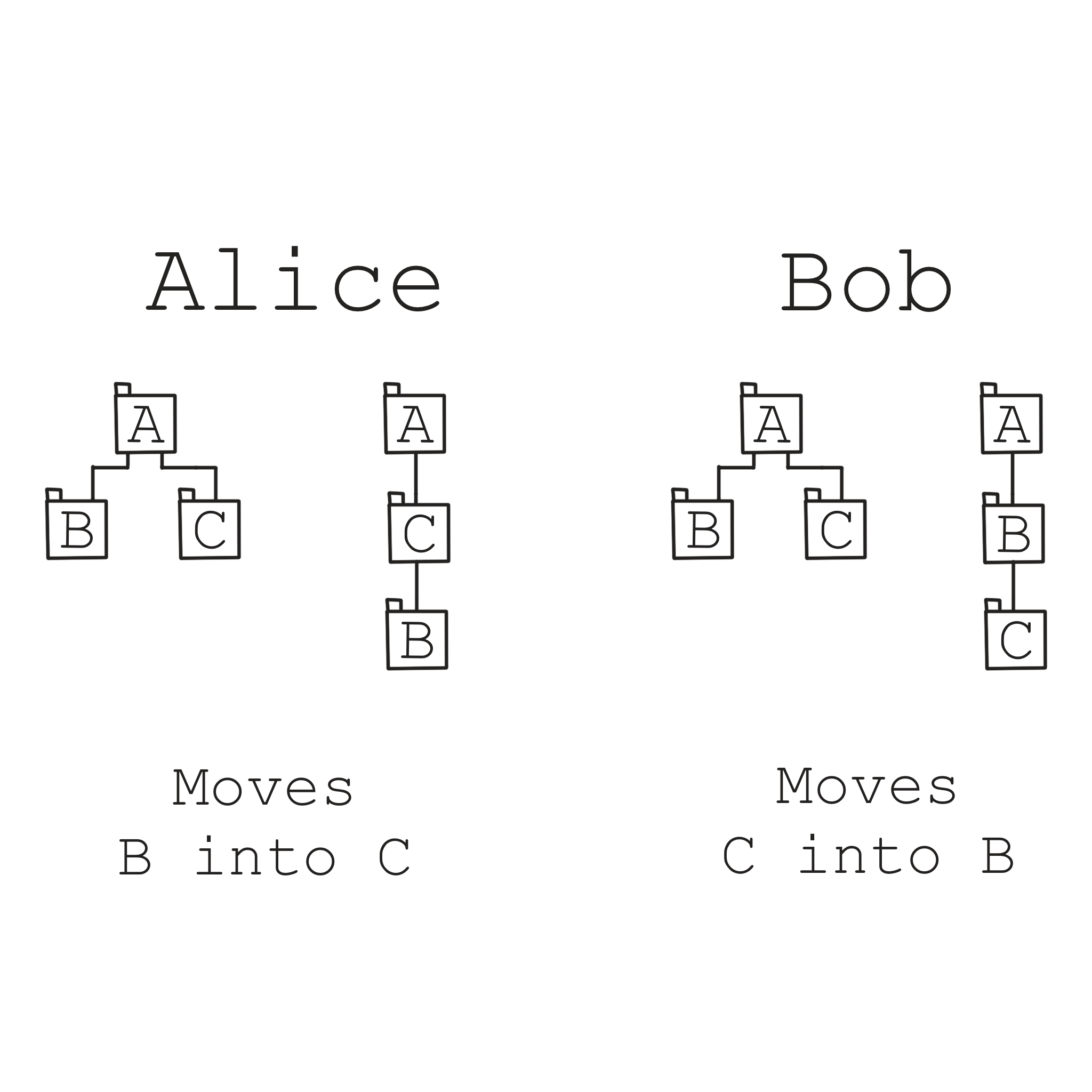

Users should be able to do almost anything offline, so what happens if, say, Alice moves a document and Bob renames that same document while they’re both offline? What happens if they both move a folder? Both edit the same document? What if that document isn’t plain text? What if Alice moves folder A into B, and Bob moves folder B into A?

Some of these situations can be resolved seamlessly, while others may require user intervention. Some of the resolution strategies are complicated and error-prone. The fuzzer ensures that, regardless of what steps a user (or their device) takes, the various components of our architecture always remain in a sensible state. Here are some examples:

- Regardless of who moved what files and when, we want to make sure that your file trees never have any cycles.

- No folder should have two files with the same name. Creating files, renaming files, and moving files could cause two files in a given location to share a name.

- Actions that change a file’s path or sharees could change how our cryptography algorithms search for a file’s decryption key. We want to make sure that, across every possible sequence of actions, your files always remain decryptable by you.
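The first two invariants are easy to state as code. Below is a minimal sketch over a hypothetical file-tree model (`Id`, `File`, and `validate` are illustrative names, not Lockbook’s actual types) where each file records a parent and a name, and the root is the file that is its own parent. Decryptability isn’t modeled here.

```rust
use std::collections::{HashMap, HashSet};

pub type Id = u32;

/// Hypothetical, minimal file-tree model: the root is the file whose parent is itself.
pub struct File {
    pub parent: Id,
    pub name: String,
}

/// Checks the cycle and duplicate-name invariants, returning the first violation.
pub fn validate(files: &HashMap<Id, File>) -> Result<(), String> {
    // Invariant 1: every file's chain of ancestors ends at the root (no cycles).
    for &start in files.keys() {
        let mut seen = HashSet::new();
        let mut current = start;
        loop {
            if !seen.insert(current) {
                return Err(format!("cycle detected involving file {current}"));
            }
            let parent = files
                .get(&current)
                .ok_or_else(|| format!("file {current} is referenced but missing"))?
                .parent;
            if parent == current {
                break; // reached the root
            }
            current = parent;
        }
    }
    // Invariant 2: no two children of the same folder share a name.
    let mut names = HashSet::new();
    for (&id, file) in files {
        if file.parent == id {
            continue; // the root has no siblings
        }
        if !names.insert((file.parent, file.name.as_str())) {
            return Err(format!("duplicate name {:?} in folder {}", file.name, file.parent));
        }
    }
    Ok(())
}
```

Applying both of Alice’s and Bob’s offline moves naively (A into B, B into A) produces exactly the cycle this check rejects, which is why the merge logic has to resolve one of them differently.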

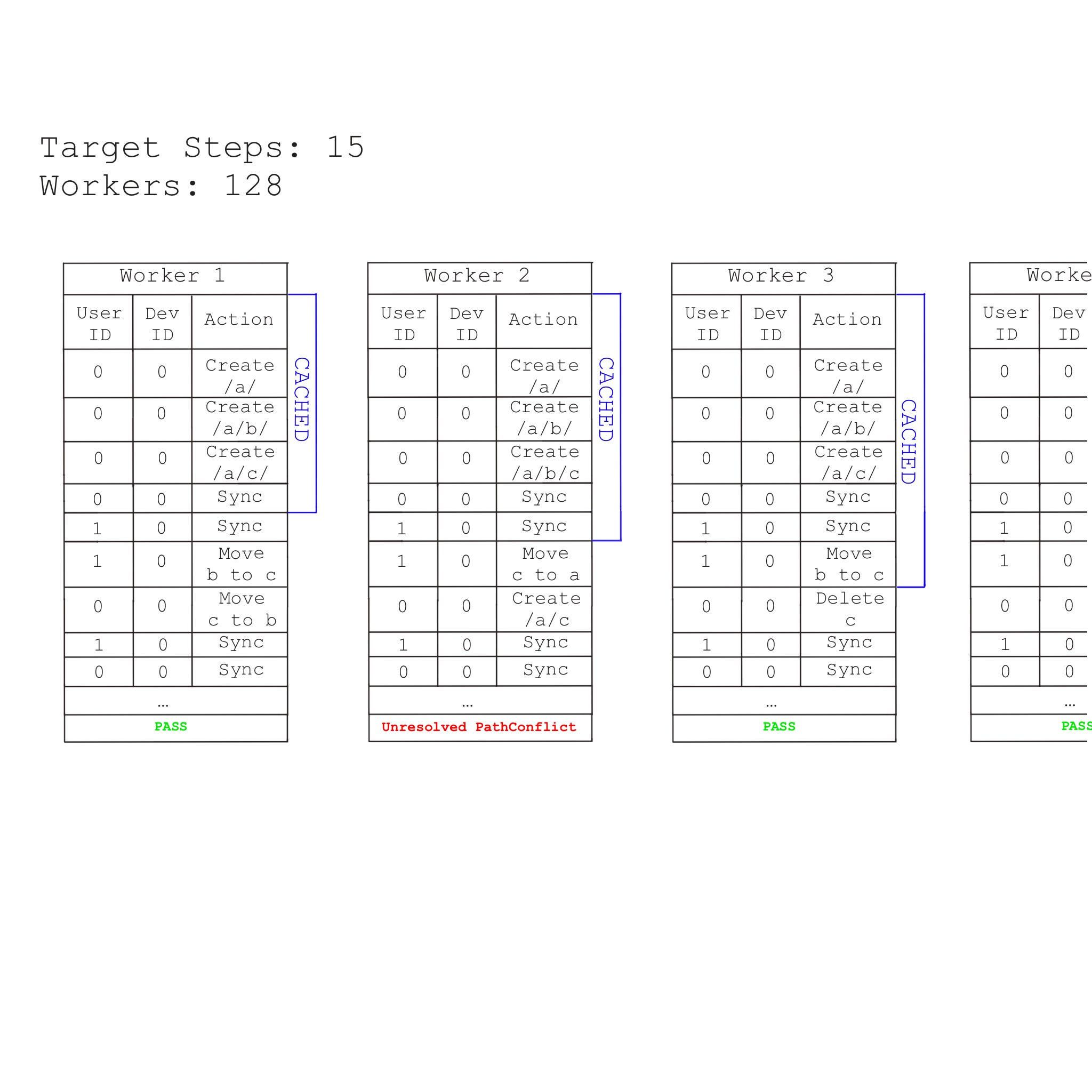

As we’ve used our platform, we’ve collected many such invariants that must hold for every possible set of actions on our platform, and the fuzzer’s job is to produce test cases that violate them. It does this by enumerating all the (significant) actions a user can take on the platform across N devices and with M collaborators. It randomly selects from this space, and at each step it asks every part of our system to confirm that everything is still as it should be. It runs this process in parallel, fully utilizing a machine’s computational resources, and it travels through the search space in a way that limits recomputation of known-good states, fully utilizing the machine’s memory.
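The core loop described above can be sketched in a few lines. This is a hypothetical, single-threaded illustration, not our fuzzer’s actual code: the `System` trait, `run_trial`, and the tiny xorshift64 generator (used so the sketch needs no crates) are all assumptions. Multiple devices, collaborators, and parallel workers are left out; each worker could simply run trials with different seeds.

```rust
/// Tiny xorshift64 PRNG so the sketch needs no external crates.
/// A fixed seed makes any failing trial reproducible.
pub struct XorShift(u64);

impl XorShift {
    pub fn new(seed: u64) -> Self {
        XorShift(seed.max(1)) // xorshift state must be nonzero
    }
    pub fn next_u64(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }
    /// Returns a value in `0..n` (slightly biased; fine for a sketch).
    pub fn below(&mut self, n: usize) -> usize {
        (self.next_u64() % n as u64) as usize
    }
}

/// Something the fuzzer can drive: it lists the actions possible right now,
/// applies one, and validates every invariant afterwards.
pub trait System {
    type Action: Clone + std::fmt::Debug;
    fn actions(&self) -> Vec<Self::Action>;
    fn apply(&mut self, action: &Self::Action);
    fn validate(&self) -> Result<(), String>;
}

/// Runs one random trial of up to `steps` actions. On failure, returns the
/// action trace that led to the violation: that trace is the test case.
pub fn run_trial<S: System>(
    sys: &mut S,
    steps: usize,
    seed: u64,
) -> Result<(), (Vec<S::Action>, String)> {
    let mut rng = XorShift::new(seed);
    let mut trace = Vec::new();
    for _ in 0..steps {
        let options = sys.actions();
        if options.is_empty() {
            break;
        }
        let action = options[rng.below(options.len())].clone();
        sys.apply(&action);
        trace.push(action);
        // After every step, ask the whole system whether it is still sensible.
        if let Err(msg) = sys.validate() {
            return Err((trace, msg));
        }
    }
    Ok(())
}
```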

Generally, when people say they’re fuzzing, they mean handing a function or program randomized input to try to produce a failure. Our fuzzer captures the spirit of this but is different enough that plenty of people have raised an eyebrow when I told them we call this process fuzzing. Unfortunately, “test simulator” isn’t as cool a name. Now that you know what this process is, if you can think of a cooler name, do tell us.

Today our highly optimized fuzzer executes close to 10,000 of these trials per second, but it started as a quick experiment I threw together to gain confidence in an early version of sync. Back then we were still using an architecture that used docker-compose to spin up nginx, Postgres, and pgbouncer locally. The fuzzer almost immediately found some bugs. We fixed them, and the value of the fuzzer became apparent to us. The time between defects started to grow, and so did the intricacy of the bugs the fuzzer revealed. As a background task, we continued to invest in the fuzzer’s implementation and the hardware it ran on. As our architecture became faster, so did the fuzzer. Today the fuzzer has been running continuously for months and has verified tens of billions of user actions, a promising sign as we get ready to begin marketing our product.

Below are some of the fuzzer’s key milestones. If you’re interested in browsing the most recent implementation you can find it linked here.

15 Trials Per Second

Initially, we were running the fuzzer on our development machines, kicking it off overnight after any large change. Our first big jump in performance came from deciding to run it on a dedicated machine and trying to fully utilize that machine’s computational resources.

80 Trials Per Second

We first ran our fuzzer on a dedicated server with 80 vCPUs, which we purchased for $600 in 2020. Most of our early optimization efforts centered on tuning Postgres to perform better.

250 Trials Per Second

Our next big jump in performance came when we switched from Postgres to Redis and upgraded the hardware the fuzzer runs on. After 2 years of faithful service, our PowerEdge experienced a hardware failure that we weren’t motivated enough to diagnose. So in 2022 we pooled our resources for a 3990X Threadripper with 120 vCPUs.

900 Trials Per Second

Before db-rs there was hmdb, which shared db-rs’s values but was worse in execution. It still served our needs better than Redis, and it performed better because it was embedded in the process rather than communicating over the network. It also used a vastly more performant serialization protocol across the whole stack, inside core and our server.

4000 Trials Per Second

In late 2022, I created a compile-time flag in core that allowed us to compile the entire server directly into core in test mode. This meant that instead of making network calls to fetch documents and updates, core directly called the corresponding server functions. At this point, no part of our test harness used the network stack.
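The post doesn’t show how that flag is wired up, so here is a hypothetical sketch of the same idea using a different mechanism: a generic transport parameter, which Rust also resolves at compile time. `Server`, `Transport`, `InProcess`, and `Core` are illustrative names, not Lockbook’s actual API. A network-backed transport would implement the same trait; it’s omitted so the example has no dependencies.

```rust
/// Hypothetical server state: an append-only log of document updates.
#[derive(Default)]
pub struct Server {
    updates: Vec<String>,
}

impl Server {
    pub fn push_update(&mut self, update: String) {
        self.updates.push(update);
    }
    pub fn get_updates(&self, since: u64) -> Vec<String> {
        self.updates.iter().skip(since as usize).cloned().collect()
    }
}

/// What the client library needs from "the server", however it is reached.
pub trait Transport {
    fn push_update(&mut self, update: String);
    fn get_updates(&mut self, since: u64) -> Vec<String>;
}

/// Test-mode transport: calls the server's functions directly, with no network stack.
#[derive(Default)]
pub struct InProcess {
    pub server: Server,
}

impl Transport for InProcess {
    fn push_update(&mut self, update: String) {
        self.server.push_update(update)
    }
    fn get_updates(&mut self, since: u64) -> Vec<String> {
        self.server.get_updates(since)
    }
}

/// Client library, generic over its transport. The concrete type is fixed at
/// compile time, so the in-process path involves no network or dynamic dispatch.
pub struct Core<T: Transport> {
    transport: T,
    last_seen: u64,
}

impl<T: Transport> Core<T> {
    pub fn new(transport: T) -> Self {
        Core { transport, last_seen: 0 }
    }
    pub fn write(&mut self, update: &str) {
        self.transport.push_update(update.to_string());
    }
    /// Pulls updates this client hasn't seen yet.
    pub fn sync(&mut self) -> Vec<String> {
        let new = self.transport.get_updates(self.last_seen);
        self.last_seen += new.len() as u64;
        new
    }
}
```

A cfg-gated type alias could pick the default transport for test builds; the generic form just makes the swap visible in a single file.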

10,000 Trials Per Second

Once db-rs was fully integrated into core and server, I added a feature to db-rs called no-io, which allowed core and server to enter a “volatile” mode for testing. This also allowed instances of core and server, and their corresponding databases to be deep copied. So when a trial ran, if most of the trial had been executed by another worker, it would deep copy that trial’s state and pick up where it left off.
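Here is a hypothetical sketch of why deep-copyable state pays off. Trials are modeled as action sequences, and a cache maps already-executed prefixes to the state they produced, so a new trial clones the longest known prefix and only runs the remainder. `PrefixCache` and `run` are illustrative names; this sketch caches every intermediate state, trading memory for recomputation, and says nothing about how db-rs’s no-io mode is actually implemented.

```rust
use std::collections::HashMap;
use std::hash::Hash;

/// Hypothetical cache of known-good states keyed by the actions that produced
/// them. With an in-memory database, a whole state is just a `Clone` value.
pub struct PrefixCache<S: Clone, A: Clone + Eq + Hash> {
    states: HashMap<Vec<A>, S>,
}

impl<S: Clone, A: Clone + Eq + Hash> PrefixCache<S, A> {
    pub fn new(initial: S) -> Self {
        let mut states = HashMap::new();
        states.insert(Vec::new(), initial); // the empty prefix is the starting state
        PrefixCache { states }
    }

    /// Runs `actions`, resuming from the longest already-executed prefix.
    /// Returns the final state and how many actions actually had to be applied.
    pub fn run(&mut self, actions: &[A], apply: impl Fn(&mut S, &A)) -> (S, usize) {
        let start = (0..=actions.len())
            .rev()
            .find(|&n| self.states.contains_key(&actions[..n]))
            .expect("the empty prefix is always cached");
        let mut state = self.states[&actions[..start]].clone(); // deep copy
        for (i, action) in actions.iter().enumerate().skip(start) {
            apply(&mut state, action);
            self.states.insert(actions[..=i].to_vec(), state.clone());
        }
        (state, actions.len() - start)
    }
}
```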

Future of the fuzzer

Personally, the fuzzer has been one of the most interesting pieces of software I’ve worked on. If, like me, this piques your interest and you’d like to research ways to make it faster with us, join our Discord.

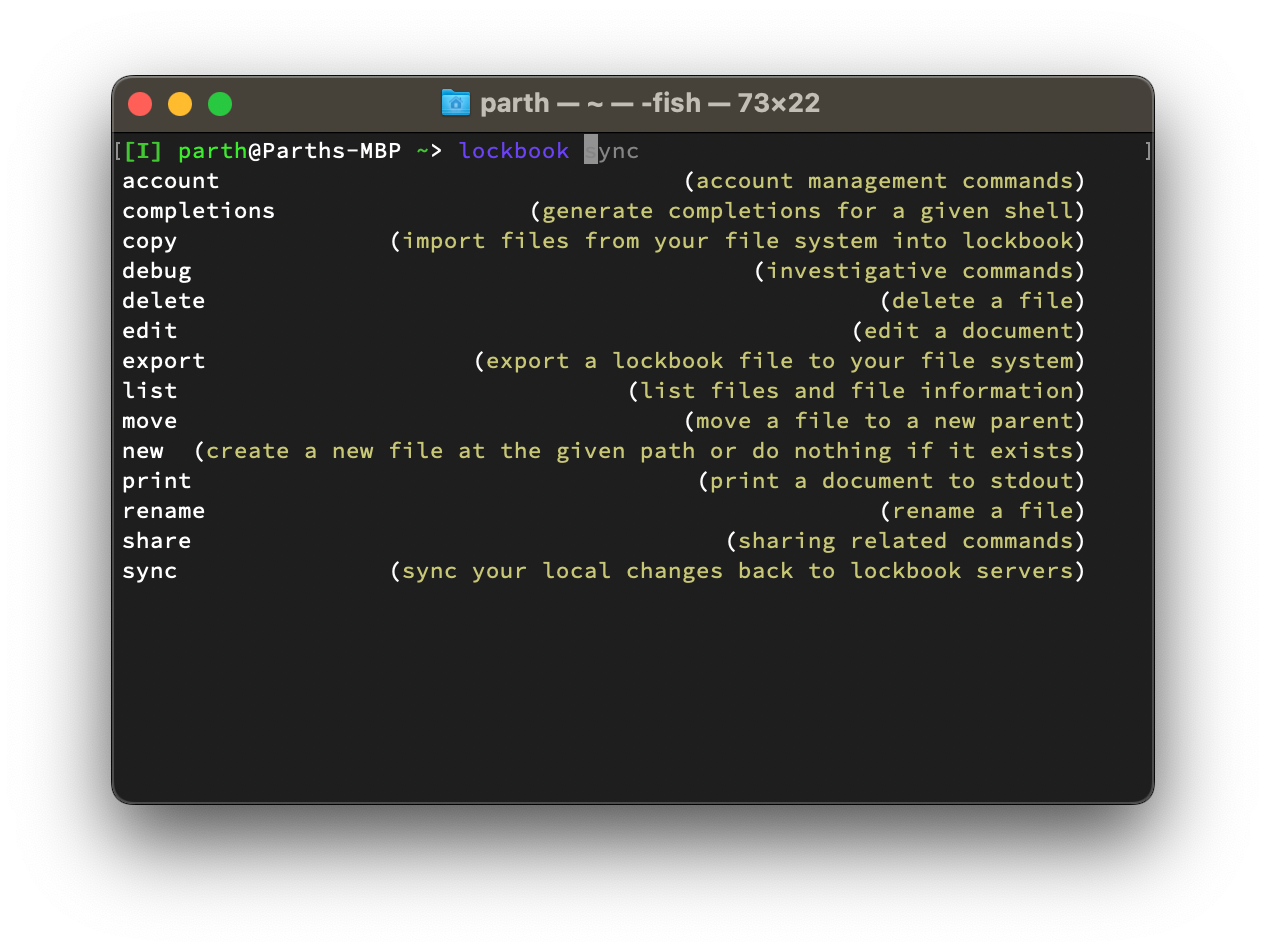

Creating a SICK CLI

At Lockbook we strongly believe in dogfooding. So we knew alongside a great, native, markdown editing experience we would want a sick CLI. Having a sick CLI creates interesting opportunities for a niche type of user who is familiar with a terminal environment:

- They can use the text editor they’re deeply familiar with.

- They can write scripts against their Lockbook.

- They can vastly reduce the surface area of attack.

- They can always maintain remote access to their Lockbook via SSH.

In this post I’m going to tackle 3 topics:

- What makes a CLI sick?

- How do you go about realizing some of those “interesting opportunities” using our CLI?

- What’s next for our CLI?

What makes a CLI sick?

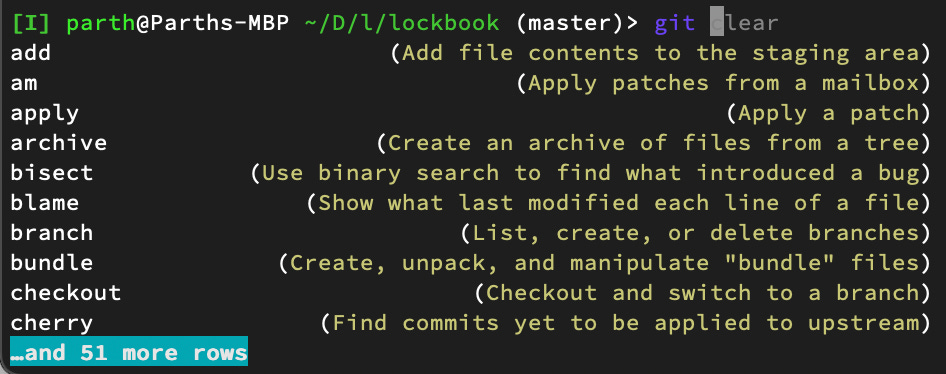

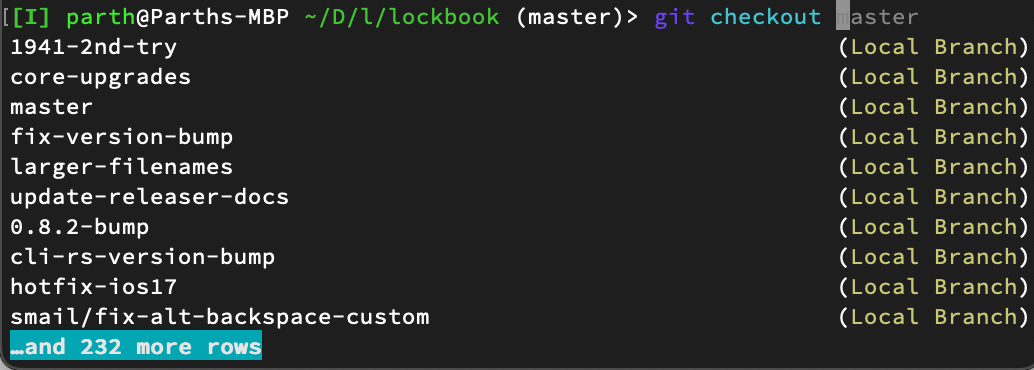

It’s tab completions. For me, tab completions are how I initially explore what a CLI can do. Later, if the CLI is sick, I use tab completions to speed up my workflow. I don’t just want to tab complete the structure of the CLI (subcommands and flags); I want to tab complete dynamic values. In Lockbook’s case, this means completing file paths and IDs.

If you’re creating a CLI most libraries make you choose between a few bad options:

- Hand-craft completion files for each shell.

- Sacrifice dynamic completions and just settle for automatically generated static completions.

Rust is no exception here: clap has some support for static completions, but no way to invoke dynamic completions without writing a completion file for each shell.

And so we set out to solve this problem for the Rust ecosystem and created cli-rs: a parsing library similar to clap, but with explicit design priorities around creating a great tab completion experience. As soon as cli-rs was stable enough, we rewrote Lockbook’s CLI using it so we could pass these gains on to our users.

Cli-rs is simple, you describe your CLI like this:

```rust
// `core` and the `input`, `edit`, and `delete` helpers are defined elsewhere
// in Lockbook's CLI; only the command definition is shown here.
Command::name("lockbook")
    .description("The private, polished note-taking platform.")
    .version(env!("CARGO_PKG_VERSION"))
    .subcommand(
        Command::name("delete")
            .description("delete a file")
            .input(Flag::bool("force"))
            .input(
                Arg::<FileInput>::name("target")
                    .description("path or id of file to delete")
                    .completor(|prompt| input::file_completor(core, prompt, None)),
            )
            .handler(|force, target| delete(core, force.get(), target.get())),
    )
    .subcommand(
        Command::name("edit")
            .description("edit a document")
            .input(edit::editor_flag())
            .input(
                Arg::<FileInput>::name("target")
                    .description("path or id of file to edit")
                    .completor(|prompt| input::file_completor(core, prompt, None)),
            )
            .handler(|editor, target| edit::edit(core, editor.get(), target.get())),
    )
    ...
```

This gives the parser all the information it needs to offer the best tab completion behavior. It handles all the static completions internally and then invokes your program when it’s time to dynamically populate a field with your user’s data.
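What a completor returns is up to your program. The post doesn’t show the internals of input::file_completor, so here is a hypothetical stand-in: given every path the user can access and the text typed so far, it suggests completions one path segment at a time, with folders ending in '/' the way shells complete directories. `complete_path` and its signature are illustrative, not cli-rs’s API.

```rust
use std::collections::BTreeSet;

/// Hypothetical completor: suggests the next path segment for `prompt`.
pub fn complete_path(all_paths: &[&str], prompt: &str) -> Vec<String> {
    let mut out = BTreeSet::new(); // sorted and de-duplicated
    for path in all_paths {
        if let Some(rest) = path.strip_prefix(prompt) {
            // Stop at the next '/' so "notes/" completes to "notes/work/"
            // rather than to every file nested beneath it.
            let end = rest.find('/').map_or(rest.len(), |i| i + 1);
            out.insert(format!("{prompt}{}", &rest[..end]));
        }
    }
    out.into_iter().collect()
}
```

Completing one segment at a time keeps the suggestion list short even in a large Lockbook, and pressing tab again drills one folder deeper.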

We also invested a ton of effort in the infrastructure that deploys our CLI to our customers’ machines so that tab completions are set up for most people by default.

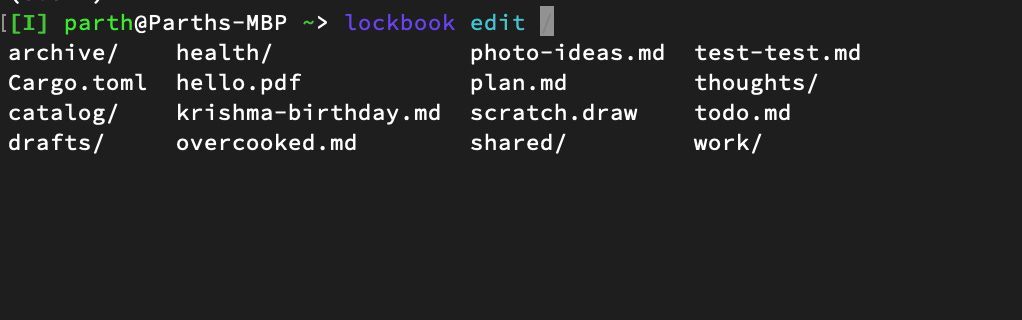

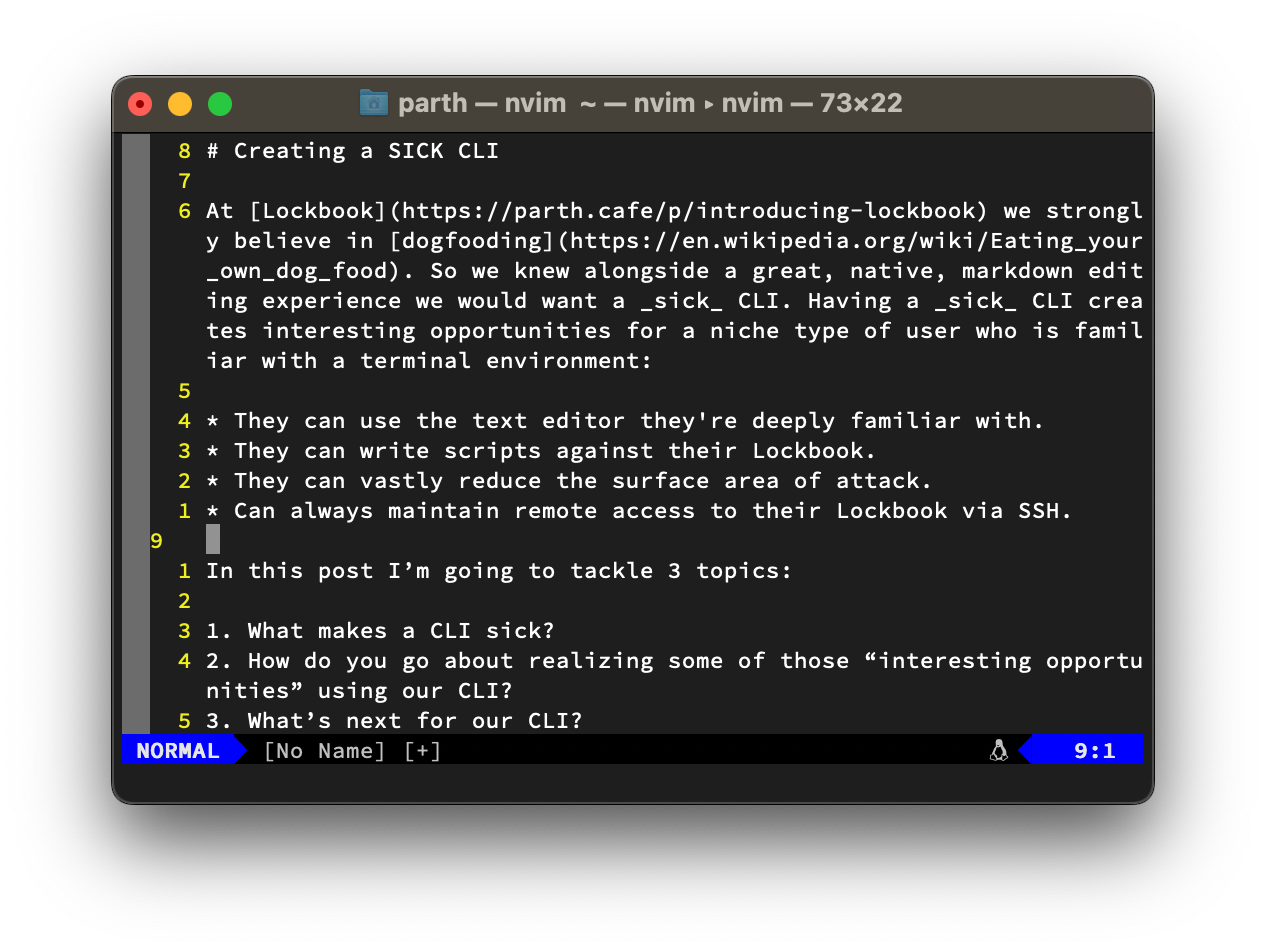

Exciting Opportunities for Power Users

Use your favorite text editor

You can run lockbook edit on any path you have access to, and our CLI will invoke vim, using any custom .vimrc that may exist. You can override the selected editor by setting the LOCKBOOK_EDITOR environment variable or using the --editor flag. So far we support vim, nvim, subl, code, emacs, and nano.
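Here is a hypothetical sketch of the selection logic described above: fall back to vim unless the --editor flag or LOCKBOOK_EDITOR names one of the supported editors. The `Editor` enum and `resolve_editor` are illustrative names, and the flag-over-environment precedence is an assumption; the post only says either one overrides the default.

```rust
/// The editors listed above as supported.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Editor {
    Vim,
    Nvim,
    Subl,
    Code,
    Emacs,
    Nano,
}

impl std::str::FromStr for Editor {
    type Err = String;
    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "vim" => Ok(Editor::Vim),
            "nvim" => Ok(Editor::Nvim),
            "subl" => Ok(Editor::Subl),
            "code" => Ok(Editor::Code),
            "emacs" => Ok(Editor::Emacs),
            "nano" => Ok(Editor::Nano),
            other => Err(format!("unsupported editor: {other}")),
        }
    }
}

/// Picks an editor. Assumed precedence: `--editor` flag, then the
/// LOCKBOOK_EDITOR environment variable, then vim.
pub fn resolve_editor(flag: Option<&str>, env_var: Option<&str>) -> Result<Editor, String> {
    match flag.or(env_var) {
        Some(name) => name.parse(),
        None => Ok(Editor::Vim),
    }
}
```

A caller would pass the environment in explicitly, e.g. `resolve_editor(flag, std::env::var("LOCKBOOK_EDITOR").ok().as_deref())`, which keeps the function easy to test without mutating the process environment.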

If we don’t support your favorite editor, send us a PR or hop in our Discord and tell us.

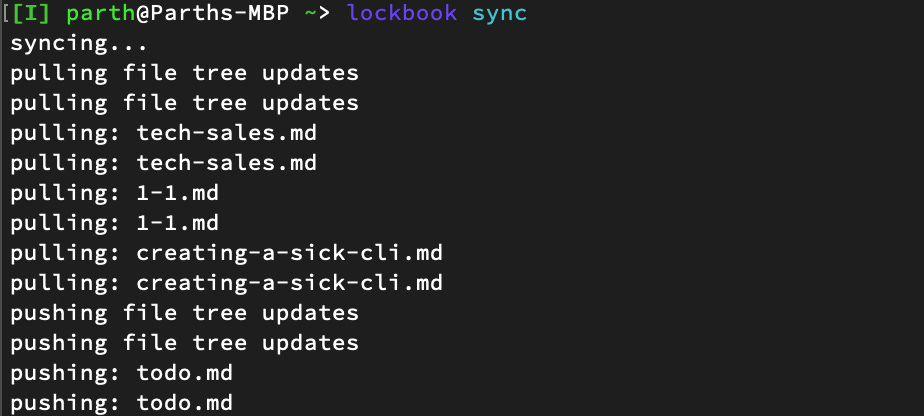

Extending Lockbook

We want Lockbook to be maximally extensible. This extensibility will take many forms, one of which is our CLI. Let’s explore some of the interesting things you can accomplish with it.

Let’s say you want a snapshot of everything in your second brain, decrypted and free of any proprietary format, for tin-foil-hat backup reasons. You can easily set up a cron job that runs lockbook sync and lockbook backup however often you want. lockbook export can write any folder or document from Lockbook to your file system, paving the way for automated updates of a blog: edit a note on your phone, and see the change live on your blog in seconds. lockbook import lets you do the opposite. Want to continuously back up a folder from your computer to Lockbook? Set up a cron job that runs lockbook import and then lockbook sync.
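If you’d rather have cron invoke a small program than a shell one-liner, here is a hypothetical Rust sketch that runs the backup steps in order and stops at the first failure. `run_steps` is an illustrative helper; it uses only the sync and backup subcommands named above, without arguments, so check the CLI’s own help for the options each subcommand accepts.

```rust
use std::process::Command;

/// Runs `program` once per argument list, in order, stopping at the first failure.
pub fn run_steps(program: &str, steps: &[&[&str]]) -> Result<(), String> {
    for args in steps {
        let status = Command::new(program)
            .args(*args)
            .status()
            .map_err(|e| format!("failed to launch {program}: {e}"))?;
        if !status.success() {
            return Err(format!("`{program} {}` exited with {status}", args.join(" ")));
        }
    }
    Ok(())
}
```

For the backup case, a call would look like `run_steps("lockbook", &[&["sync"], &["backup"]])`; syncing first means the backup reflects edits made on your other devices.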

Ultra secure